NVIDIA Superchip Expanded To Blackwell GPUs: GB200 Grace Blackwell Superchip With 40 PFLOPs AI, 864 GB Memory


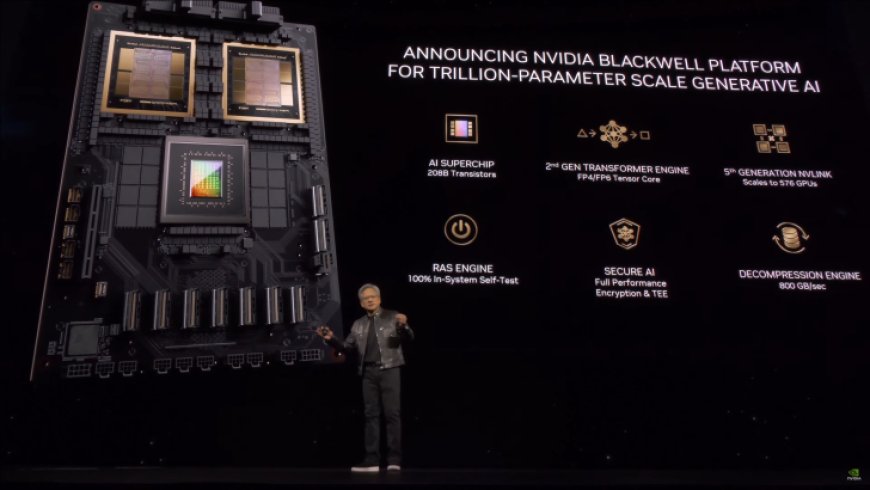

NVIDIA has announced its latest DGX & Superchip platforms, featuring its brand-new Blackwell B200 GPUs alongside the Grace CPU.

NVIDIA's Superchip is designed as the quintessential platform for AI and HPC workloads. As we've seen before, the platform comes in two configurations: a CPU-only variant and a CPU+GPU variant. These Superchip products were first announced with Hopper H100 GPUs paired with the first-gen Arm-based Grace CPU. Today, NVIDIA is introducing the next chapter of Superchip.

The company announced two new solutions. The first is the GB200, which combines the Grace CPU with the Blackwell B200 GPU. This solution is slated for launch in 2024, will feature 192 GB of HBM3e memory per GPU, and can be configured at up to 2700W. It also brings the latest protocol support with PCIe 6.0 (2x 256 GB/s). Blackwell B200 GPUs will be compatible with both existing Hopper platforms and the latest solutions from data center manufacturers. So let's take a deep dive into the specifications.

Each GB200 Grace Blackwell Superchip will be equipped with two B200 AI GPUs and a single Grace CPU with 72 of the same Arm Neoverse V2 cores. The platform will offer 40 PetaFLOPs of AI compute (FP4) and a massive 864 GB memory pool, with the HBM alone delivering 16 TB/s of memory bandwidth. The chips will be interconnected via NVLink at 3.6 TB/s.
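The 864 GB figure only makes sense once you add the CPU's memory to the GPUs' HBM3e. Here is a quick back-of-the-envelope sketch. It assumes, since the article doesn't say so, that the Grace CPU contributes 480 GB of LPDDR5X and that each B200 delivers 8 TB/s of HBM3e bandwidth; the 192 GB of HBM3e per GPU comes from the figures above.

```python
# Back-of-the-envelope check of the GB200 Superchip memory figures.
# Assumptions (not stated in the article): Grace contributes 480 GB of
# LPDDR5X, and each B200 provides 8 TB/s of HBM3e bandwidth.
GPUS_PER_SUPERCHIP = 2
HBM_PER_GPU_GB = 192          # HBM3e capacity per B200
HBM_BW_PER_GPU_TBS = 8        # assumed HBM3e bandwidth per B200
GRACE_LPDDR5X_GB = 480        # assumed CPU-attached memory


def superchip_memory_gb() -> int:
    """Total memory pool: HBM3e on both GPUs plus the Grace CPU's LPDDR5X."""
    return GPUS_PER_SUPERCHIP * HBM_PER_GPU_GB + GRACE_LPDDR5X_GB


def superchip_hbm_bandwidth_tbs() -> int:
    """Aggregate HBM3e bandwidth across both GPUs (CPU memory excluded)."""
    return GPUS_PER_SUPERCHIP * HBM_BW_PER_GPU_TBS


print(superchip_memory_gb())          # 864
print(superchip_hbm_bandwidth_tbs())  # 16
```

Under these assumptions, 384 GB of HBM3e plus 480 GB of LPDDR5X lands exactly on 864 GB. That is also why only the HBM portion is quoted at 16 TB/s.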

Two of these GB200 Grace Blackwell Superchip platforms will be incorporated within a Blackwell Compute node for up to 80 PetaFLOPs of AI performance, 1.7 TB of fast memory (HBM3e plus CPU memory), and 32 TB/s of memory bandwidth, all in a liquid-cooled MGX package.
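The compute-node numbers are just the per-superchip figures doubled. The short sketch below scales them that way. The `Platform` class is a hypothetical helper written for illustration. It also shows that the 1.7 TB figure only adds up if it counts each superchip's full 864 GB pool, not HBM3e alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Hypothetical container for the headline figures quoted in the article."""
    ai_pflops: int
    memory_gb: int
    hbm_bw_tbs: int

    def scale(self, n: int) -> "Platform":
        """Multiply every figure by n (e.g. n superchips in one node)."""
        return Platform(self.ai_pflops * n, self.memory_gb * n, self.hbm_bw_tbs * n)


GB200 = Platform(ai_pflops=40, memory_gb=864, hbm_bw_tbs=16)
node = GB200.scale(2)  # two GB200 Superchips per Blackwell Compute node

print(node)  # Platform(ai_pflops=80, memory_gb=1728, hbm_bw_tbs=32)
print(f"{node.memory_gb / 1000:.1f} TB")  # 1.7 TB
```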

These chips will be packaged within brand-new GB200 NVL72 compute platforms, each outfitted with 18 compute trays in a rack for up to 36 Grace CPUs and 72 Blackwell GPUs. Each rack will feature the ConnectX-800G InfiniBand SuperNIC and a BlueField-3 DPU (80 GB/s of memory bandwidth) for in-network computing. The company is also housing its latest NVLink Switches, which feature eight ports at 1.8 TB/s for up to 14.4 TB/s of aggregate bandwidth.
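The NVL72 rack counts follow from the per-tray layout. The sketch below works them out. It assumes that each of the 18 compute trays holds one Blackwell Compute node, meaning two GB200 Superchips; the article implies this but doesn't state it outright. The same arithmetic is applied to the switch ports.

```python
# Rack-level arithmetic for GB200 NVL72.
# Assumption: each compute tray is one Blackwell Compute node (two GB200s).
TRAYS_PER_RACK = 18
SUPERCHIPS_PER_TRAY = 2   # assumed: one Blackwell Compute node per tray
CPUS_PER_SUPERCHIP = 1
GPUS_PER_SUPERCHIP = 2


def rack_counts() -> tuple[int, int]:
    """Return (Grace CPUs, Blackwell GPUs) in one rack."""
    superchips = TRAYS_PER_RACK * SUPERCHIPS_PER_TRAY
    return superchips * CPUS_PER_SUPERCHIP, superchips * GPUS_PER_SUPERCHIP


def switch_aggregate_tbs(ports: int = 8, per_port_tbs: float = 1.8) -> float:
    """Aggregate switch bandwidth: port count times per-port bandwidth."""
    return round(ports * per_port_tbs, 1)


print(rack_counts())           # (36, 72)
print(switch_aggregate_tbs())  # 14.4
```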

We have to talk a little more about the NVLink Switch itself, since it is a 50-billion-transistor juggernaut fabricated on the same TSMC 4NP node as the Blackwell GPUs. It houses 72 ports with dual 200 Gb/s SerDes and 4 NVLinks at 1.8 TB/s, for 7.2 TB/s of full-duplex bandwidth, plus 3.6 TFLOPs of SHARP in-network compute capability.
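The NVLink Switch figures can be cross-checked in two ways. The sketch below does both. It assumes that each SerDes lane runs at 200 gigabits (not gigabytes) per second, and that the 7.2 TB/s full-duplex number is twice the per-direction rate. Those are our reading of the spec, not something NVIDIA spelled out here.

```python
# Two independent ways to arrive at the NVLink Switch's 7.2 TB/s figure.
# Assumption: SerDes lanes run at 200 Gb/s (gigabits), and full duplex
# means the per-direction bandwidth counted in both directions.
PORTS = 72
SERDES_PER_PORT = 2
SERDES_GBITS = 200  # assumed gigabits per second per SerDes lane


def per_direction_tbs() -> float:
    """Raw SerDes bandwidth in one direction, converted from Gb/s to TB/s."""
    gigabits = PORTS * SERDES_PER_PORT * SERDES_GBITS  # 28,800 Gb/s
    return gigabits / 8 / 1000                          # bits->bytes, GB->TB


def full_duplex_tbs() -> float:
    """Both directions combined."""
    return 2 * per_direction_tbs()


def nvlink_total_tbs(links: int = 4, per_link_tbs: float = 1.8) -> float:
    """Sum of the switch's NVLinks at 1.8 TB/s each."""
    return round(links * per_link_tbs, 1)


print(per_direction_tbs())  # 3.6
print(full_duplex_tbs())    # 7.2
print(nvlink_total_tbs())   # 7.2
```

Both routes land on 7.2 TB/s, which suggests the SerDes rate is quoted in gigabits.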

In terms of performance, the GB200 Grace Blackwell Superchip platform will offer up to a 30x boost in AI performance over the GH200 Grace Hopper platform. The NVIDIA Blackwell GB200 will be available on DGX Cloud later this year, while leading OEMs such as Dell, Cisco, HPE, Lenovo, Supermicro, Aivres, ASRock Rack, ASUS, Eviden, Foxconn, Gigabyte, Inventec, Pegatron, QCT, Wistron, Wiwynn & ZT Systems will offer their own solutions down the road.
