NVIDIA Readies “Scaled-Down” Blackwell B200A AI Accelerator, Targeting The Wider Enterprise & AI Market

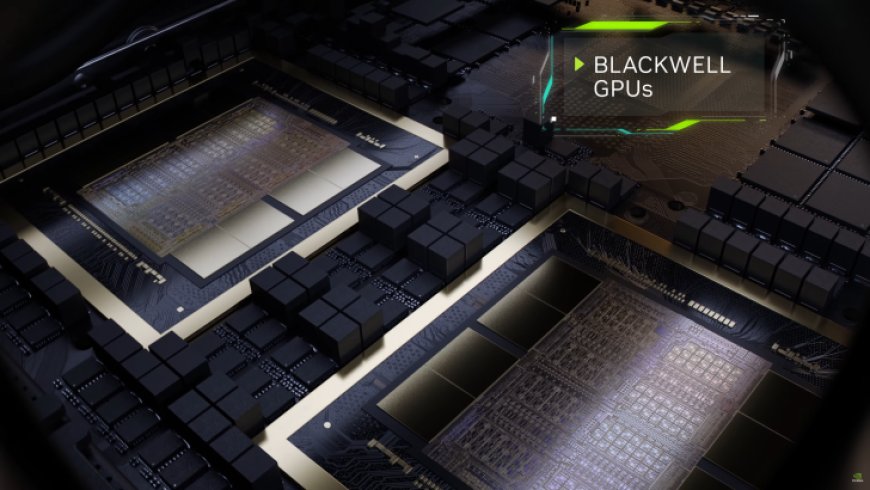

NVIDIA is readying a scaled-down Blackwell AI accelerator called the B200A, which is expected to target a wider market.

NVIDIA's Blackwell architecture has been the subject of market rumors, with multiple sources online reporting that NVIDIA's next-gen AI products will be delayed by at least a quarter. However, TrendForce, one of the most reputable sources for market conditions out there, claims that Blackwell is right on schedule and that the company's B100 and B200 AI GPUs are slated to enter the market next quarter, presumably Q4 2024.

TrendForce notes that Blackwell is currently in the early stages of shipping, which means that NVIDIA is sampling it with customers, as disclosed previously.

This suggests that the delay rumors swirling around the markets are overblown and that NVIDIA's Blackwell is in the right place for now. Interestingly, TrendForce notes that Team Green initially plans to focus on producing the Blackwell AI accelerators, claiming that they are in high demand.

TrendForce also notes that NVIDIA plans to ship a lower-end variant of the Blackwell AI GPUs called the B200A. It describes the chip as a scaled-down model designed to cater to the larger part of the AI market, namely enterprise clients and AI startups.

As for specifications, the Blackwell B200A AI accelerator is expected to feature four HBM3E 12-Hi memory stacks, for a total capacity of 144 GB. For comparison, the standard Blackwell chips use eight HBM3E stacks, offering 192 GB in the 8-Hi configuration and up to 288 GB. Apart from this, the accelerator will employ air cooling, which TrendForce suggests will help NVIDIA ensure an adequate supply of the B200A to its customers.
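The 144 GB figure can be checked with some quick arithmetic. The sketch below assumes each HBM3E DRAM die holds 24 Gb (3 GB), which is not stated in the TrendForce report. The function name `hbm_capacity_gb` is purely illustrative.

```python
# Assumption (not from the TrendForce report): each HBM3E DRAM die holds 24 Gb, i.e. 3 GB.
GB_PER_DIE = 3


def hbm_capacity_gb(stacks: int, dies_per_stack: int, gb_per_die: int = GB_PER_DIE) -> int:
    """Return total HBM capacity in GB for a package with `stacks` memory sites."""
    return stacks * dies_per_stack * gb_per_die


# B200A as described: four memory sites, each a 12-Hi (12-die) stack.
b200a_capacity = hbm_capacity_gb(stacks=4, dies_per_stack=12)
print(b200a_capacity)  # 144
```

Under the same assumption, eight 12-Hi stacks would come to 288 GB, which lines up with the upper capacity quoted for the standard Blackwell parts.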

As for Blackwell entering the mainstream market, TrendForce says that the supply chain is expected to be filled with NVIDIA's next-gen AI products by 2025, with AI accelerators leading the way, followed by in-demand servers. By next year, Blackwell is expected to account for 80% of NVIDIA's overall shipments, and 2025 may ultimately prove to be the year of NVIDIA's next "gold rush," fueled by the AI hype.
