NVIDIA Once Again Proves Why It’s The AI Boss: Sweeps All MLPerf Training Benchmarks, Achieves Near-Perfect Scaling In GPT-175B, Hopper Now 30% Faster

NVIDIA has once again showcased its might in MLPerf, posting nearly 100% efficiency and huge uplifts with Hopper H100 & H200 GPUs in massive models such as GPT-3 175B.

You can't say AI without mentioning NVIDIA, and the company has proven it once again with its latest MLPerf Training v4.0 benchmark submissions, where it dominated across all fronts. NVIDIA states that AI compute requirements continue to increase at an explosive pace: since the introduction of transformers, requirements have grown 256x in just two years.

The other aspect is performance: the higher the performance, the more return on investment (ROI) it generates for businesses. NVIDIA outlines three segments and how performance matters in each of them.

First is training, where there is a need for more intelligent models that are faster to train. Second is inference, which covers interactive user experiences such as ChatGPT, where users expect an instant response to a query they have just entered. NVIDIA recently mentioned on its earnings call that LLM service providers have an opportunity to generate $7 in revenue for every $1 invested over four years, which is a substantial return for businesses.

Great AI performance translates into significant business opportunities. For example, in our recent earnings call, we described how LLM service providers can turn a single dollar invested into seven dollars in just four years running the Llama 3 70B model on NVIDIA HGX H200 servers. This return assumes a LLM service provider serving Llama 3 70B at $0.60/M tokens, with an HGX H200 server throughput of 24,000 tokens/second.
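The revenue side of that claim can be checked with simple arithmetic. The sketch below uses only the two figures quoted above ($0.60 per million tokens and 24,000 tokens per second) and assumes, beyond what NVIDIA states here, 365-day years and a server that is fully utilized the entire time. The implied investment is just the revenue divided by seven, not a published server price.

```python
# Back-of-the-envelope check of the revenue behind the "$1 in, $7 out" claim.
# From the text: $0.60 per million tokens, 24,000 tokens/second, four years.
# Assumptions (not from the text): 365-day years and 100% utilization.

PRICE_PER_MILLION_TOKENS = 0.60      # USD, from the text
THROUGHPUT_TOKENS_PER_SEC = 24_000   # per HGX H200 server, from the text
YEARS = 4                            # from the text
SECONDS_PER_YEAR = 365 * 24 * 3600   # assumption: non-leap years


def revenue_over_period(price_per_million, tokens_per_sec, years):
    """Revenue in USD if every generated token is sold at the quoted price."""
    total_tokens = tokens_per_sec * SECONDS_PER_YEAR * years
    return total_tokens / 1_000_000 * price_per_million


revenue = revenue_over_period(
    PRICE_PER_MILLION_TOKENS, THROUGHPUT_TOKENS_PER_SEC, YEARS
)
# Investment implied by a 7x return; derived here, not a quoted price.
implied_investment = revenue / 7

print(f"Revenue over {YEARS} years: ${revenue:,.0f}")
print(f"Implied investment at 7x:  ${implied_investment:,.0f}")
```

Under these assumptions, one server generates roughly $1.8 million in token revenue over four years, which puts the implied investment at about $260,000.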

NVIDIA H200 GPU Supercharges Generative AI and HPC

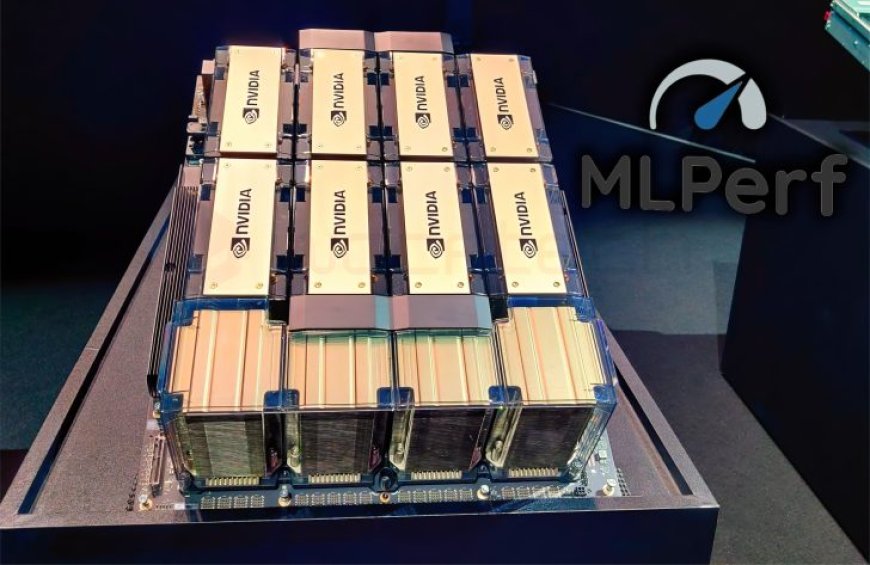

The NVIDIA H200 Tensor Core GPU builds upon the strength of the Hopper architecture, with 141GB of HBM3e memory and over 40% more memory bandwidth compared to the H100 GPU. Pushing the boundaries of what’s possible in AI training, the NVIDIA H200 Tensor Core GPU extended the H100’s performance by 14% in its MLPerf Training debut.

NVIDIA Software Drives Unmatched Performance Gains

Additionally, our submissions using a 512 H100 GPU configuration are now up to 27% faster compared to just one year ago due to numerous optimizations to the NVIDIA software stack. This improvement highlights how continuous software enhancements can significantly boost performance, even with the same hardware.

The result of this work is a 3.2x performance increase in just a year coming from a larger scale, and significant software improvements. This combination also delivered nearly perfect scaling — as the number of GPUs increased by 3.2x, so did the delivered performance.

Excelling at LLM Fine-Tuning

As enterprises seek to customize pretrained large language models, LLM fine-tuning is becoming a key industry workload. MLPerf introduced a new LLM fine-tuning benchmark this round, based on the popular low-rank adaptation (LoRA) technique applied to Meta Llama 2 70B.
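To give a sense of why LoRA is popular for fine-tuning, the sketch below counts trainable parameters for a single weight matrix. LoRA freezes the original matrix and trains two small low-rank factors instead. The matrix size and rank used here are hypothetical values chosen for illustration, not the configuration used in the MLPerf benchmark.

```python
# Trainable-parameter count for one weight matrix: full fine-tuning vs. LoRA.
# LoRA keeps W (d_out x d_in) frozen and trains B (d_out x r) and A (r x d_in).
# D and RANK below are hypothetical, not the MLPerf benchmark settings.


def full_finetune_params(d_out, d_in):
    """Every entry of the weight matrix is trainable."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, rank):
    """Only the two low-rank factors are trainable."""
    return rank * (d_out + d_in)


D = 8192    # hypothetical square projection matrix size
RANK = 16   # hypothetical LoRA rank

full = full_finetune_params(D, D)
lora = lora_trainable_params(D, D, RANK)

print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={RANK}):   {lora:,} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the matrix")
```

With these illustrative numbers, LoRA trains well under 1% of the parameters in the matrix, which is why the technique is far cheaper than full fine-tuning.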

The NVIDIA platform excelled at this task, scaling easily from eight to 1,024 GPUs. This means that NVIDIA’s platform can handle both small- and large-scale AI tasks efficiently, making it versatile for various business needs.

Accelerating Stable Diffusion and GNN Training

NVIDIA also accelerated Stable Diffusion v2 training performance by up to 80% at the same system scales submitted last round.

So how did NVIDIA fare in the latest MLPerf Training v4.0 benchmarks? It broke every performance record it had previously set, achieving five new world records in the process.

The numbers are below:

Not only that, but NVIDIA also delivered 3.2x the performance of last year's submission. The EOS-DFW superpod now features 11,616 H100 GPUs (versus 3,584 GPUs in June 2023), which are interconnected using NVIDIA's 400G Quantum-2 InfiniBand networking.
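As a rough check on the near-perfect scaling claim, the GPU counts above can be compared against the 3.2x performance figure. The sketch below divides the speedup by the growth in GPU count. Note that 3.2x is a rounded number and, per NVIDIA, includes software improvements as well as scale, so the result is an approximation rather than a pure measure of scaling efficiency.

```python
# Rough scaling-efficiency estimate from the figures quoted in the text.
# Caveat: the 3.2x speedup is rounded and also reflects software gains,
# so this is an approximation, not a pure weak/strong-scaling measurement.

GPUS_2023 = 3_584     # June 2023 submission, from the text
GPUS_2024 = 11_616    # current submission, from the text
PERF_SPEEDUP = 3.2    # year-over-year performance gain, from the text


def scaling_efficiency(speedup, gpus_before, gpus_after):
    """Speedup achieved per unit of GPU-count growth (1.0 = perfectly linear)."""
    return speedup / (gpus_after / gpus_before)


gpu_ratio = GPUS_2024 / GPUS_2023
efficiency = scaling_efficiency(PERF_SPEEDUP, GPUS_2023, GPUS_2024)

print(f"GPU count grew by {gpu_ratio:.2f}x")
print(f"Approximate scaling efficiency: {efficiency:.1%}")
```

The GPU count grew by about 3.24x, so a 3.2x speedup corresponds to an efficiency of roughly 99%, consistent with the "near-perfect" description.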

NVIDIA also states that the new and improved software stack helped achieve near-perfect scaling at this size in training workloads such as GPT-3 175B. This scaling matters because NVIDIA is now building large-scale AI factories equipped with 100,000 to 300,000 GPUs. One of these AI factories with Hopper GPUs will be coming online later this year, and a new Blackwell factory is expected to go live by 2025.

But it's not just scaling performance that is getting better; Hopper GPUs are seeing upgrades too. The latest full-stack optimizations have boosted the performance of H100 GPUs by another 27% in the latest benchmarks, made possible through several new integrations such as:

In text-to-image training, NVIDIA highlights a performance gain of 80% with Hopper GPUs, achieved in just seven months. The optimizations that made this increase possible include full-iteration CUDA Graphs, a distributed optimizer, and optimized convolutions and GEMMs.

Moving over to the NVIDIA HGX H200 Hopper platform, the new chips delivered the fastest performance in Llama 2 70B fine-tuning in the MLPerf v4.0 benchmark. The Hopper H200 GPU was 3.2x faster than Intel's Gaudi 2 in Llama 2 70B fine-tuning and 4.7x faster than Gaudi 2 in Llama 2 70B inference. All results used an 8-accelerator configuration.

The improvements made to Hopper since 2023, such as 2.5x higher throughput and 1.5x higher batch-1 performance across 70B inference workloads, have led NVIDIA to update its "The More You Buy, The More You Save" tagline to "The More You Buy, The More You Make".

Even while presenting these benchmarks, NVIDIA is teasing bigger performance uplifts for H100 and H200 GPUs in its upcoming software stack.

If you think NVIDIA makes crazy hardware, its CUDA and software team is a different breed entirely, showcasing its engineering and tuning expertise quarter after quarter.
