NVIDIA Hopper H200 GPU Continues To Dominate In Latest MLPerf 4.0 Results: Up To 3x Gain In GenAI With TensorRT-LLM

NVIDIA continues to push the AI envelope with its strong TensorRT-LLM suite, boosting the H200 GPUs to new heights in the latest MLPerf v4.0 results.

Generative AI, or GenAI, is an emerging market, and every hardware manufacturer is trying to grab a slice of the cake. Despite their best efforts, NVIDIA has so far taken the bulk of the share, and there's no stopping the green giant: it has posted strong benchmark results and set new records in the MLPerf v4.0 inference results.

Fine-tuning of TensorRT-LLM has been ongoing ever since the AI software suite was released last year. We saw a major increase in performance with the previous MLPerf v3.1 results, and now with MLPerf v4.0, NVIDIA is supercharging Hopper's performance. Inference matters because it accounted for 40% of the data center revenue generated last year. Inference workloads span LLMs (Large Language Models), visual content, and recommenders. As these models grow in size, they also grow in complexity, which calls for both strong hardware and strong software.

That's where TensorRT-LLM comes in: it is a state-of-the-art inference compiler co-designed with NVIDIA's GPU architectures.

Using the latest TensorRT-LLM optimizations, NVIDIA has managed to squeeze up to 2.9x more performance out of its Hopper GPUs (such as the H100) in MLPerf v4.0 versus MLPerf v3.1. In today's benchmark results, NVIDIA has set new performance records in the MLPerf Llama 2 (70 billion parameter) test, with up to 31,712 tokens generated per second on the H200 (Preview) and 21,806 tokens generated per second on the H100.

It should be mentioned that the H200 GPU was benchmarked about a month ago, which is why it is listed in the preview state, but NVIDIA has stated that it is already sampling the GPUs to customers and will be shipping them in the second quarter.

The NVIDIA H200 GPU offers an additional 45% performance gain in Llama 2 versus the H100, thanks to its larger memory configuration of 141 GB HBM3E and higher bandwidth of up to 4.8 TB/s. The H200 is also a behemoth against Intel's Gaudi 2, the only competing solution submitted to the MLPerf v4.0 benchmarks, while the H100 likewise comes in at a massive 2.7x gain.
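As a quick sanity check, the 45% figure can be reproduced from the Llama 2 throughput numbers quoted in this article. The snippet below is only a minimal sketch: the helper name `relative_gain` is our own, and it assumes the two tokens-per-second results are directly comparable (same scenario and system size), which the figures as quoted here do not spell out.

```python
# Llama 2 70B throughput figures quoted in this article (tokens per second).
H200_TOKENS_PER_SEC = 31_712
H100_TOKENS_PER_SEC = 21_806


def relative_gain(new: float, baseline: float) -> float:
    """Return the fractional gain of `new` over `baseline` (0.45 means +45%)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return new / baseline - 1.0


# Assumption: both results come from the same scenario and system size.
h200_vs_h100 = relative_gain(H200_TOKENS_PER_SEC, H100_TOKENS_PER_SEC)
print(f"H200 vs H100: {h200_vs_h100:+.1%}")
```

The ratio works out to roughly 1.45x, which lines up with the 45% gain stated above.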

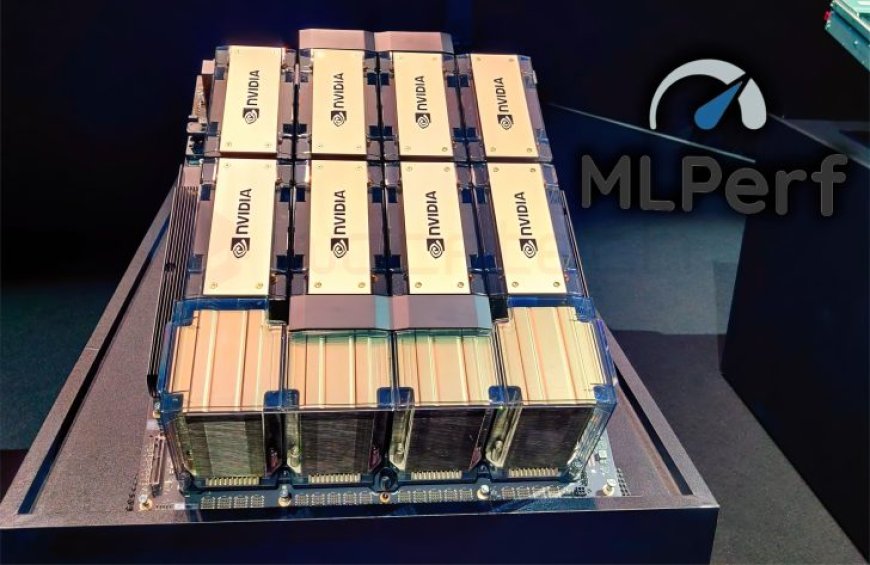

In addition, an 8-GPU NVIDIA HGX H200 system shattered the Stable Diffusion XL benchmark, achieving 13.8 queries/second in the server scenario and 13.7 samples/second in the offline scenario.

It doesn't stop there. While the H200 is drop-in compatible with H100 platforms, a custom thermal design variant of the H200 also exists in the form of the MGX platform (GPU+CPU+DPU), which can boost the TDP to as much as 1000W for up to 14% higher performance versus the standard air-cooled variant. The custom solutions are available from OEMs such as ASRock Rack, ASUS, Gigabyte, Pegatron, QCT, and Supermicro. H200 AI GPUs are also expected to be available from a wide list of NVIDIA's CSP and OEM partners.
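To put the 1000W variant in perspective, here is a rough back-of-the-envelope estimate of how performance per watt shifts. It is a sketch under stated assumptions, not a measured result: it assumes the 14% uplift is relative to the 700W base TDP configuration cited for the H200 in this article, and that each part actually draws its full TDP. The function name `relative_perf_per_watt` is hypothetical.

```python
def relative_perf_per_watt(perf_ratio: float, base_watts: float, new_watts: float) -> float:
    """Perf-per-watt of the new configuration relative to the baseline (1.0 = equal)."""
    if base_watts <= 0 or new_watts <= 0:
        raise ValueError("power values must be positive")
    power_ratio = new_watts / base_watts
    return perf_ratio / power_ratio


# Assumptions: +14% performance at 1000W versus a 700W baseline,
# with power draw equal to TDP in both cases.
ratio = relative_perf_per_watt(perf_ratio=1.14, base_watts=700, new_watts=1000)
print(f"Relative perf/W of the 1000W variant: {ratio:.3f}")
```

Under those assumptions the custom variant trades efficiency for peak throughput, delivering roughly 0.8x the performance per watt of the standard part.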

NVIDIA's Hopper H200 GPUs ship with a 700W base TDP, with custom designs reaching up to 1000W. The Blackwell GPUs come in 700W (B100) and 1000/1200W (B200) configurations. Speaking of Blackwell, NVIDIA confirmed that only B100 GPUs will be drop-in compatible with Hopper systems, while B200 GPUs will require a completely different chassis and system design. The first Blackwell systems will ship later this year, so we can expect to see their MLPerf results in future submissions.
