NVIDIA H100 NVL GPU With 94 GB HBM3 Memory Is Designed For ChatGPT-Class Language Models


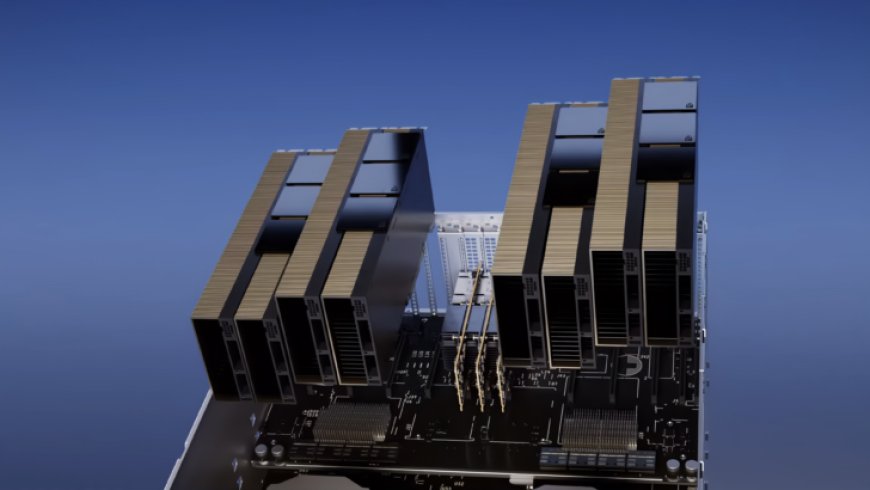

NVIDIA has unveiled its new Hopper-based H100 NVL GPU with 94 GB of HBM3 memory, designed for deploying large language models such as ChatGPT.

The Hopper-powered H100 NVL is a PCIe solution that pairs two GPUs over an NVLink interconnect, with each GPU featuring 94 GB of HBM3 memory (188 GB in total). It can handle language models of up to 175 billion parameters, the size of OpenAI's GPT-3. According to NVIDIA, a single server with four of these GPUs can deliver up to a 10x speedup over a traditional DGX A100 server with eight GPUs.
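As a rough check on the 175-billion-parameter figure, the sketch below estimates how much memory a model's weights alone occupy at two precisions and compares that with the combined memory of the two 94 GB GPUs. It assumes 1 GB = 10^9 bytes, 2 bytes per parameter for FP16 and 1 byte for FP8, and a model split across both GPUs; it ignores activations, the KV cache, and framework overhead, so real deployments need extra headroom.

```python
# Rough weight-memory estimate for an LLM on a dual-GPU H100 NVL pair.
# Assumptions (not from the article): 1 GB = 10**9 bytes, weights only,
# model split across both GPUs, no activations, KV cache, or overhead.

BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}
GPU_MEMORY_GB = 94  # per GPU, as stated in the article
NUM_GPUS = 2        # dual-GPU NVLink pair


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Return the memory (in GB) needed to hold the model weights."""
    return num_params * BYTES_PER_PARAM[precision] / 10**9


def fits(num_params: int, precision: str) -> bool:
    """True if the weights alone fit in the combined memory of the pair."""
    return weight_memory_gb(num_params, precision) <= GPU_MEMORY_GB * NUM_GPUS


if __name__ == "__main__":
    params = 175 * 10**9
    for p in ("fp16", "fp8"):
        print(f"{p}: {weight_memory_gb(params, p):.0f} GB, fits: {fits(params, p)}")
```

Under these assumptions, FP16 weights (350 GB) would not fit in 188 GB, while FP8 weights (175 GB) would, with little room left for the KV cache.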

For comparison, the standard H100 PCIe offers cut-down specifications relative to the H100 SXM5 configuration: it enables 114 of the 144 SMs on the full GH100 GPU, versus 132 SMs on the H100 SXM5. It is rated at 3200 TFLOPs of FP8, 1600 TFLOPs of FP16, and 48 TFLOPs of FP64 compute, and features 456 Tensor Cores and 456 texture units.
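The 456 Tensor Core figure follows directly from the SM count: each Hopper SM contains four Tensor Cores (and four texture units). The short sketch below applies that per-SM figure to the three configurations mentioned above; the dictionary and function names are illustrative only.

```python
# Derive Tensor Core counts from enabled SM counts for GH100-based parts.
# Each Hopper SM contains 4 Tensor Cores (and 4 texture units).
TENSOR_CORES_PER_SM = 4
FULL_GH100_SMS = 144

SM_COUNTS = {
    "GH100 (full die)": FULL_GH100_SMS,
    "H100 SXM5": 132,
    "H100 PCIe": 114,
}


def tensor_cores(sm_count: int) -> int:
    """Total Tensor Cores for a given number of enabled SMs."""
    return sm_count * TENSOR_CORES_PER_SM


def enabled_fraction(sm_count: int, full: int = FULL_GH100_SMS) -> float:
    """Share of the full die's SMs that are enabled."""
    return sm_count / full


if __name__ == "__main__":
    for name, sms in SM_COUNTS.items():
        print(f"{name}: {sms} SMs, {tensor_cores(sms)} Tensor Cores, "
              f"{enabled_fraction(sms):.1%} of the full die")
```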

With fewer SMs enabled and lower clocks, the H100 PCIe delivers lower peak compute and has a TDP of 350 W, half the 700 W TDP of the SXM5 variant. The PCIe card retains 80 GB of memory on a 5120-bit bus interface, but uses HBM2e rather than HBM3, delivering over 2 TB/s of bandwidth.
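Peak memory bandwidth is the bus width multiplied by the per-pin data rate, divided by 8 bits per byte. The article does not give the HBM2e data rate, so the sketch below assumes roughly 3.2 Gbps (3200 Mbps) per pin purely as an illustrative value; substituting the actual rate for a specific card gives its rated bandwidth.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mbps: int) -> float:
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    # bus_width_bits * data_rate_mbps = megabits per second across the bus;
    # divide by 8 for megabytes per second, then by 1000 for GB/s.
    return bus_width_bits * data_rate_mbps / 8 / 1000


if __name__ == "__main__":
    # 5120-bit bus from the article; 3200 Mbps per pin is an ASSUMED rate.
    print(f"{peak_bandwidth_gbs(5120, 3200):.0f} GB/s")
```

At the assumed 3.2 Gbps, a 5120-bit bus works out to about 2048 GB/s, consistent with the ">2 TB/s" figure.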

The H100 PCIe is rated at 48 TFLOPs of non-tensor FP32 compute, while the AMD Instinct MI210 has a peak-rated FP32 compute power of 45.3 TFLOPs. With sparsity and Tensor Core operations, the H100 PCIe can deliver up to 800 TFLOPs of TF32 throughput. It also offers more memory: 80 GB versus 64 GB on the MI210. From the looks of it, NVIDIA is charging a premium for these higher AI/ML capabilities.
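To put the comparison in proportion, the sketch below computes simple ratios from the figures quoted above. It also assumes that the 800 TFLOPs tensor figure counts NVIDIA's 2:4 structured sparsity, which at best doubles throughput, so the dense-equivalent rate would be about half; that factor of two is an assumption about how the headline number was derived, not a figure from the article.

```python
# Peak, vendor-rated figures as quoted in the article.
H100_PCIE = {"fp32_tflops": 48.0, "tensor_sparse_tflops": 800.0, "memory_gb": 80}
MI210 = {"fp32_tflops": 45.3, "memory_gb": 64}

# ASSUMPTION: the sparse tensor figure is 2x the dense rate (2:4 structured sparsity).
SPARSITY_SPEEDUP = 2.0


def ratio(a: float, b: float) -> float:
    """How many times larger a is than b."""
    return a / b


if __name__ == "__main__":
    fp32 = ratio(H100_PCIE["fp32_tflops"], MI210["fp32_tflops"])
    mem = ratio(H100_PCIE["memory_gb"], MI210["memory_gb"])
    dense = H100_PCIE["tensor_sparse_tflops"] / SPARSITY_SPEEDUP
    print(f"FP32 ratio (H100 PCIe / MI210): {fp32:.2f}x")
    print(f"Memory ratio (H100 PCIe / MI210): {mem:.2f}x")
    print(f"Dense-equivalent tensor throughput: {dense:.0f} TFLOPs")
```

On these numbers the FP32 lead is modest (about 6%), while the memory advantage is 25%.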
