NVIDIA Grace CPU Delivers Up To 30% Higher Performance At 70% Better Efficiency Versus Latest x86 Data Center Chips

NVIDIA has announced that its Grace CPU Superchip is now sampling, promising major performance and efficiency gains over x86 chips.

NVIDIA first announced its Grace CPU and the respective Superchip design at GTC 2022. The Grace CPU is NVIDIA's first processor based on a custom Arm architecture and is aimed at the server and HPC segment. The CPU comes in two Superchip configurations: a Grace Superchip module with two Grace CPUs, and a Grace+Hopper Superchip with one Grace CPU connected to a Hopper H100 GPU.

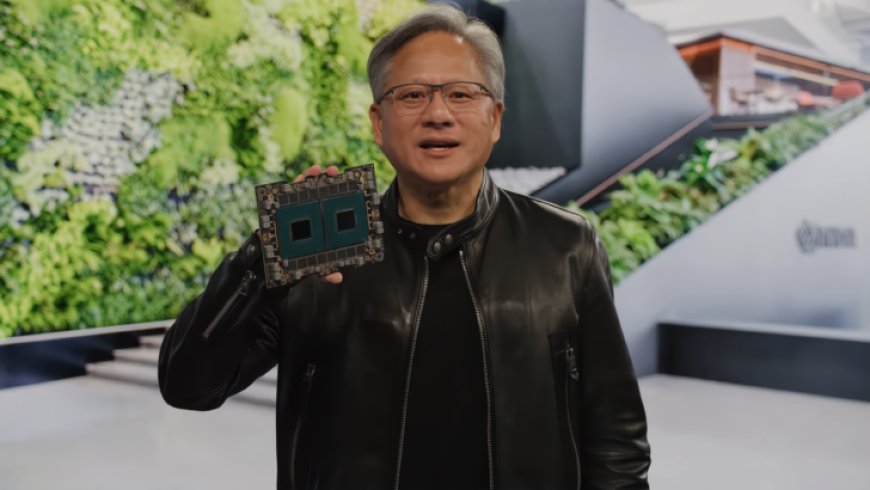

Today, at GTC 2023, NVIDIA showed the Grace CPU Superchip to the public for the first time. The whole unit measures 5 x 8 inches and supports both air cooling and passive cooling. NVIDIA showed both a standard passive heatsink and a large 1U rack heatsink design. Two Grace CPU Superchip modules can fit within a single 1U air-cooled server.

The company also shared new performance metrics for microservices and big data workloads, where the NVIDIA Grace CPU Superchip beat the latest x86 CPUs from Intel and AMD by up to 30% while delivering 70% higher efficiency and 2x the data throughput. NVIDIA states that cloud service providers (CSPs) can outfit a power-limited data center with 1.7 times more Grace servers, each delivering 25% higher throughput. At iso-power (the same power budget), the Grace CPU Superchip gives CSPs 2x the growth opportunity.
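The "2x the growth opportunity" figure appears to follow from multiplying the two quoted factors. Here is a minimal sketch of that arithmetic, assuming the x86 baseline is normalized to 1.0 and that the two factors combine multiplicatively; the function and variable names are illustrative and not from NVIDIA:

```python
# Back-of-the-envelope check of the data center claim quoted above.
# Assumption: the x86 baseline fleet is normalized to 1.0 and the two
# quoted factors multiply. Names are illustrative only.

def fleet_throughput_gain(server_count_ratio: float, per_server_gain: float) -> float:
    """Total throughput of a power-limited data center relative to the baseline."""
    return server_count_ratio * per_server_gain

# 1.7x more servers in the same power envelope, each with 25% higher throughput.
gain = fleet_throughput_gain(server_count_ratio=1.7, per_server_gain=1.25)
print(f"Relative fleet throughput: {gain:.3f}x")
```

Under these assumptions the product lands slightly above 2, which is consistent with the rounded "2x" claim.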

Some of the main highlights of Grace include:

As NVIDIA's first server CPU, Grace features 72 Arm v9.0 cores with support for SVE2 and various virtualization extensions such as Nested Virtualization and S-EL2. The CPU is fabricated on TSMC's 4N process node, an optimized version of the 5nm node made exclusively for NVIDIA. The new architecture can provide up to 7.1 TFLOPs of peak FP64 performance.

Grace is designed to be paired, so one of the most crucial aspects of the design is its C2C (Chip-To-Chip) interconnect. Grace uses NVLINK for this: it ties the chips of a Superchip together and removes the bottlenecks associated with a typical cross-socket configuration.

The C2C NVLINK interconnect provides 900 GB/s of raw bi-directional bandwidth (the same bandwidth as a GPU-to-GPU NVLINK switch on Hopper) while consuming just 1.3 pJ/bit, making it 5 times more efficient than the PCIe protocol.
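To put the energy-per-bit figure in perspective, it can be converted into watts at full link bandwidth. The sketch below is a rough estimate only: it assumes 1 GB = 10^9 bytes, that the link is saturated at the full 900 GB/s, and that "5 times more efficient" means PCIe would spend five times the energy per bit. The helper name is hypothetical.

```python
# Rough interconnect power estimate from the quoted figures.
# Assumptions: 1 GB = 1e9 bytes, link fully saturated, and a PCIe-style
# link costing 5x the energy per bit. Not an NVIDIA-published number.

def link_power_watts(bandwidth_gb_per_s: float, energy_pj_per_bit: float) -> float:
    """Power drawn by a link moving data at the given rate and energy cost."""
    bits_per_second = bandwidth_gb_per_s * 1e9 * 8
    joules_per_bit = energy_pj_per_bit * 1e-12
    return bits_per_second * joules_per_bit

nvlink_c2c_w = link_power_watts(900, 1.3)
pcie_equivalent_w = link_power_watts(900, 1.3 * 5)

print(f"NVLINK C2C at 900 GB/s: {nvlink_c2c_w:.2f} W")
print(f"Same traffic at 5x the energy per bit: {pcie_equivalent_w:.2f} W")
```

With these inputs the C2C link works out to under 10 W at full bandwidth, against several tens of watts for the same traffic at five times the energy per bit.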

The NVIDIA Grace CPU features a scalable coherency fabric with a distributed cache design. The chip has up to 3.225 TB/s of bi-section bandwidth, is scalable beyond 72 cores (144 on the Superchip), integrates 117 MB of L3 cache per CPU or 234 MB per Superchip, and supports Arm Memory Partitioning and Monitoring (MPAM). Grace also allows for a unified memory architecture with shared page tables. Two NVIDIA Grace+Hopper Superchips can be interconnected through an NVSwitch, and a Grace CPU on one Superchip can communicate directly with the GPU on the other or even access its VRAM at native NVLINK speeds.

Taking a closer look at the memory design of Grace, NVIDIA is utilizing up to 960 GB of LPDDR5X (ECC) across 32 channels, delivering up to 1 TB/s of memory bandwidth. NVIDIA states that LPDDR5X provides the best value when weighing overall bandwidth, cost, and power requirements. For example, versus DDR5, the LPDDR5X subsystem provides 53% more bandwidth at one-eighth the power per gigabyte per second and at a similar cost. HBM2e memory could have provided more bandwidth and efficiency, but at 3x the cost.
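The memory figures above imply a few derived numbers that are easy to compute. The following sketch treats the quoted values as exact, takes 1 TB = 1000 GB, and assumes bandwidth is spread evenly across the 32 channels; the DDR5 baseline is inferred from the "53% more" claim rather than taken from any published DDR5 configuration.

```python
# Derived figures from the quoted LPDDR5X numbers.
# Assumptions: 1 TB = 1000 GB, bandwidth evenly split across channels,
# and the DDR5 baseline inferred from the "53% more bandwidth" claim.

LPDDR5X_BANDWIDTH_GBPS = 1000.0   # "up to 1 TB/s"
CHANNELS = 32
BANDWIDTH_ADVANTAGE = 1.53        # 53% more bandwidth than DDR5
POWER_PER_GBPS_RATIO = 1 / 8      # one-eighth the power per GB/s

per_channel_gbps = LPDDR5X_BANDWIDTH_GBPS / CHANNELS
implied_ddr5_gbps = LPDDR5X_BANDWIDTH_GBPS / BANDWIDTH_ADVANTAGE
# Total memory power relative to the DDR5 baseline when both run at peak.
relative_memory_power = BANDWIDTH_ADVANTAGE * POWER_PER_GBPS_RATIO

print(f"Per-channel bandwidth: {per_channel_gbps:.2f} GB/s")
print(f"Implied DDR5 baseline: {implied_ddr5_gbps:.0f} GB/s")
print(f"LPDDR5X power vs DDR5 at peak: {relative_memory_power:.2f}x")
```

Read this way, the LPDDR5X subsystem would deliver its higher peak bandwidth at roughly a fifth of the DDR5 subsystem's power, provided the per-GB/s ratio holds at full load.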

For I/O, you get 68 PCIe Gen 5.0 lanes. These can be configured as four x16 links at 128 GB/s each, with the remaining lanes going to two MISC links. There are also 12 coherent NVLINK lanes, shared with two of the Gen 5 PCIe x16 links.
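The 128 GB/s figure for an x16 link and the lane budget can be sanity-checked from the PCIe 5.0 signaling rate of 32 GT/s per lane with 128b/130b encoding. The sketch below assumes 128 GB/s is a raw bi-directional number; the function name and its parameters are illustrative.

```python
# Sanity check of the PCIe Gen 5 figures.
# PCIe 5.0 signals at 32 GT/s per lane and uses 128b/130b line encoding.
# Assumption: the quoted 128 GB/s is raw, bi-directional bandwidth.

PCIE5_GT_PER_S = 32.0
ENCODING_EFFICIENCY = 128 / 130

def pcie5_link_gb_per_s(lanes: int, bidirectional: bool = True,
                        include_encoding: bool = False) -> float:
    """Bandwidth of a PCIe 5.0 link in GB/s (1 GB = 1e9 bytes)."""
    per_direction = PCIE5_GT_PER_S * lanes / 8  # one bit per transfer per lane
    if include_encoding:
        per_direction *= ENCODING_EFFICIENCY
    return per_direction * (2 if bidirectional else 1)

raw_x16 = pcie5_link_gb_per_s(16)
effective_x16 = pcie5_link_gb_per_s(16, include_encoding=True)
lanes_left_for_misc = 68 - 4 * 16  # lanes not consumed by the four x16 links

print(f"x16 raw bi-directional: {raw_x16:.0f} GB/s")
print(f"x16 after 128b/130b encoding: {effective_x16:.1f} GB/s")
print(f"Lanes left after four x16 links: {lanes_left_for_misc}")
```

Four x16 links consume 64 of the 68 lanes, which leaves four lanes for the remaining MISC connectivity.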

As for power, the CPU-only NVIDIA Grace Superchip is optimized for single-core performance, offers up to 1 TB/s of memory bandwidth, and carries a TDP of 500W in its 144-core dual-chip configuration.

NVIDIA also confirmed that the Grace CPU Superchip is now sampling and that leading partners such as ASUS, ATOS, Gigabyte, HPE, Supermicro, Wistron, ZT Systems, and QCT are already building systems.
