NVIDIA Deep-Dives Into Blackwell Infrastructure: NV-HBI Used To Fuse Two AI GPUs Together, 5th Gen Tensor Cores, 5th Gen NVLINK & Spectrum-X Detailed


NVIDIA has given a deep dive into its Blackwell AI platform and how it leverages a new high-bandwidth interface to fuse two GPU dies into one.

Last week, NVIDIA announced that it would be sharing more information on its Blackwell AI platform and released the first images of Blackwell up and running in data centers.

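Today, the company presented its latest details on the whole Blackwell platform, which comprises not just one chip but several different products, including the Blackwell GPU, the Grace CPU, the NVLINK Switch chip, the Spectrum-4 Ethernet switch, and the Bluefield-3 DPU.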

The entire NVIDIA Blackwell AI platform is powered by over 400 "optimized" CUDA-X libraries designed to extract maximum performance from Blackwell chips. These libraries target diverse application domains, build on more than a decade of innovation, and are bundled within the CUDA-X package. Together, they support an ever-expanding set of algorithms, which NVIDIA says makes the stack future-proof for the next generation of AI models.

So let's talk about Blackwell. The chip has six main building blocks: the AI Superchip with 208 billion transistors; the Transformer Engine, which supports FP4/FP6 data formats through its Tensor Cores; a Secure AI engine with full-performance encryption and a TEE (Trusted Execution Environment); 5th Gen NVLINK, which scales to 576 AI GPUs; a RAS engine with 100% in-system self-test capabilities; and a Decompression engine with 800 GB/s of bandwidth.

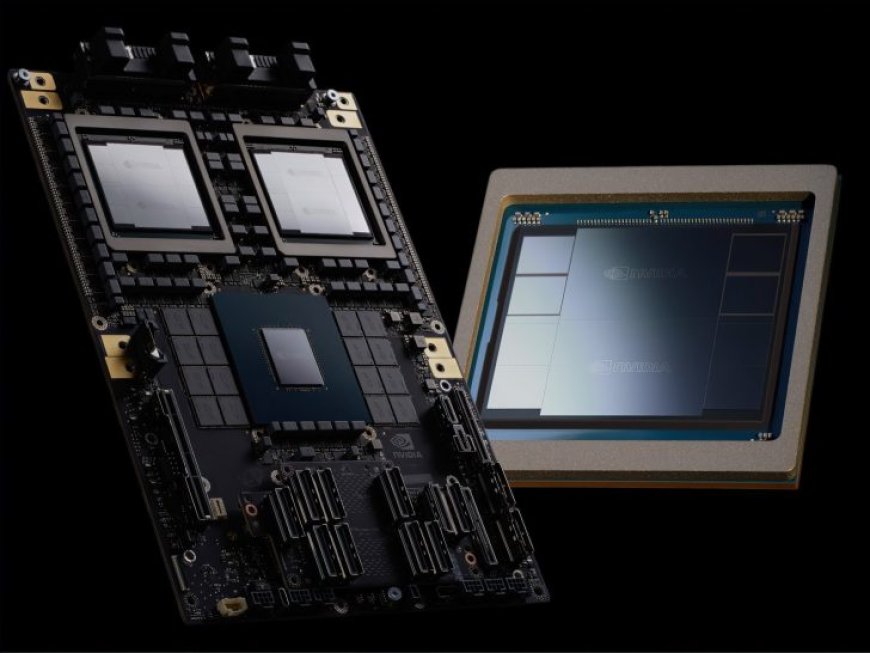

The NVIDIA Blackwell GPU itself features the highest AI compute, memory bandwidth, and interconnect bandwidth ever offered in a single GPU. It merges two reticle-limited GPU dies into one GPU using NV-HBI, which we will get to in a bit. The chip packs 208 billion transistors fabricated on TSMC's 4NP process node in a design measuring over 1,600 mm². The Blackwell AI GPU offers 20 PetaFLOPS of FP4 AI compute, 8 TB/s of memory bandwidth (eight HBM3e sites), 1.8 TB/s of bidirectional NVLINK bandwidth, and a high-speed NVLINK-C2C link to the Grace CPU.
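
To put the 20 PetaFLOPS and 8 TB/s figures side by side, here is a minimal roofline sketch in Python. It treats both numbers as ideal peaks (real kernels achieve less), and the function names are our own hypothetical helpers, not any NVIDIA API.

```python
# Headline peak figures quoted above for one Blackwell GPU.
PEAK_FP4_FLOPS = 20e15   # 20 PetaFLOPS of FP4
PEAK_HBM_BYTES = 8e12    # 8 TB/s of HBM3e bandwidth

def ridge_point(peak_flops: float, peak_bw: float) -> float:
    """Arithmetic intensity (FLOPs per byte) at which a kernel stops being
    memory-bound and becomes compute-bound in a simple roofline model."""
    return peak_flops / peak_bw

def attainable_flops(intensity: float, peak_flops: float, peak_bw: float) -> float:
    """Roofline bound: the lower of the compute roof and bandwidth x intensity."""
    return min(peak_flops, peak_bw * intensity)

print(ridge_point(PEAK_FP4_FLOPS, PEAK_HBM_BYTES))            # 2500.0
print(attainable_flops(100, PEAK_FP4_FLOPS, PEAK_HBM_BYTES))  # 8e14 FLOP/s, i.e. memory-bound
```

At roughly 2,500 FP4 operations per byte of HBM traffic, only kernels with very high data reuse, such as large matrix multiplies, would be compute-bound on these headline numbers. That is one reason narrow formats like FP4, which move fewer bytes per value, matter as much as the raw FLOPS.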

NVIDIA's journey into multi-die architectures started with Ampere. Although it was not a traditional MCM design, its two GPU blocks were joined by a high-bandwidth interconnect in such a way that the chip behaved no differently from a monolithic implementation.

This design was refined over the following generations, and with Blackwell, the company moved to a two-die implementation. The dies are fused using NV-HBI (NVIDIA High-Bandwidth Interface), which offers 10 TB/s of bidirectional bandwidth across a single edge at very low energy per bit, delivering a coherent link between the two GPU dies that NVIDIA pitches as a high-performance, no-compromise solution.
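
A quick back-of-envelope check shows why a 10 TB/s die-to-die link can make two dies behave like one GPU. This Python sketch assumes (our assumption, not an NVIDIA statement) that the bidirectional figure splits evenly into 5 TB/s per direction and that the 8 TB/s of HBM3e bandwidth is divided evenly between the two dies.

```python
# Figures quoted in this article (TB/s).
NV_HBI_BIDIR_TBPS = 10.0    # die-to-die link, both directions combined
HBM_TOTAL_TBPS = 8.0        # eight HBM3e sites across the whole GPU
NVLINK_BIDIR_TBPS = 1.8     # off-package NVLINK per GPU
DIES = 2

# ASSUMPTIONS: even split per direction and even split of HBM between dies.
nv_hbi_per_direction = NV_HBI_BIDIR_TBPS / 2   # 5.0 TB/s each way
hbm_per_die = HBM_TOTAL_TBPS / DIES            # 4.0 TB/s local to each die

# Could one die read the other die's memory at that die's full local HBM rate?
print("cross-die link covers remote HBM:", nv_hbi_per_direction >= hbm_per_die)
# How much wider is the die-to-die link than the GPU's NVLINK?
print(f"NV-HBI / NVLINK: {NV_HBI_BIDIR_TBPS / NVLINK_BIDIR_TBPS:.1f}x")
```

Under those assumptions, each direction of the link has more headroom than the remote die's local HBM can supply, which is consistent with the claim that software sees a single coherent GPU rather than two devices.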

The Blackwell GPU architecture is also supercharged with 5th Generation Tensor Cores, which add new micro-tensor scaled FP formats such as FP4, FP6, and FP8. The micro-tensor scale factors are applied to fixed-length vectors of elements, so every element maps to a fixed, shared scale factor. This delivers a wider FP range, amplified bandwidth, lower power, and finer-granularity quantization.
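
The idea behind micro-tensor scaling can be illustrated with a small, pure-Python sketch of block-scaled FP4 quantization. It is not NVIDIA's implementation: the FP4 element is assumed to use the E2M1 layout, the shared scale is assumed to be a power of two, and the 32-element default block size is borrowed from the OCP Microscaling (MX) specification. Every function name here is hypothetical.

```python
import math

# Magnitudes an FP4 E2M1 element can hold (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = 6.0
BLOCK_SIZE = 32  # ASSUMPTION: borrowed from the OCP MX spec, not from Blackwell docs

def round_to_fp4(x: float) -> float:
    """Round to the nearest E2M1 value, keeping the sign (ties go to the smaller magnitude)."""
    mag = min(FP4_E2M1_VALUES, key=lambda v: abs(abs(x) - v))
    return math.copysign(mag, x)

def quantize_block(block):
    """Give a fixed-length block one shared power-of-two scale, then round each element to FP4."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    # Smallest power of two that brings the block's largest magnitude into [-6, 6].
    scale = 2.0 ** math.ceil(math.log2(amax / FP4_MAX))
    return scale, [round_to_fp4(v / scale) for v in block]

def quantize(values, block_size=BLOCK_SIZE):
    """Split a vector into fixed-length blocks and quantize each block independently."""
    return [quantize_block(values[i:i + block_size]) for i in range(0, len(values), block_size)]

def dequantize(blocks):
    """Rebuild approximate values: each FP4 element times its block's shared scale."""
    return [scale * q for scale, qs in blocks for q in qs]

values = [0.01, 0.02, 100.0, 50.0]
print(dequantize(quantize(values, block_size=2)))  # per-block scales: [0.01171875, 0.0234375, 96.0, 48.0]
print(dequantize(quantize(values, block_size=4)))  # one shared scale: [0.0, 0.0, 96.0, 48.0]
```

Because each block gets its own scale, the small values in the first block survive quantization; with a single scale for the whole vector, they round to zero. That is the "finer granularity" benefit in miniature.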

Looking at the performance implications of the 5th Gen Tensor Cores, each of the existing data formats (FP16, BF16, FP8) sees a 2x speedup per clock per SM versus Hopper, while FP6 delivers a 2x speedup and FP4 a 4x speedup over Hopper's FP8 format. On top of the new formats, Blackwell AI GPUs also feature higher operating frequencies and more SMs than Hopper chips.
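
Because the per-clock, per-SM gains compound with clock speed and SM count, a rough peak-throughput comparison multiplies the three factors. The Python sketch below encodes that relationship; the SM and clock ratios are hypothetical placeholders, since the article does not give Blackwell's SM count or frequencies.

```python
def peak_speedup(per_sm_per_clock: float, sm_ratio: float, clock_ratio: float) -> float:
    """Peak tensor throughput ratio vs Hopper.

    Peak throughput = (ops per SM per clock) x (number of SMs) x (clock rate),
    so the individual ratios multiply. Memory and other bottlenecks are ignored.
    """
    return per_sm_per_clock * sm_ratio * clock_ratio

# Per-SM, per-clock factors relative to Hopper FP8, as quoted in the article.
PER_SM_PER_CLOCK_VS_HOPPER_FP8 = {"FP8": 2.0, "FP6": 2.0, "FP4": 4.0}

# HYPOTHETICAL ratios, chosen only to show how the factors combine.
SM_RATIO, CLOCK_RATIO = 1.1, 1.05

for fmt, factor in PER_SM_PER_CLOCK_VS_HOPPER_FP8.items():
    print(f"{fmt}: ~{peak_speedup(factor, SM_RATIO, CLOCK_RATIO):.2f}x vs Hopper FP8")
```

Plugging in real SM counts and clocks, once known, would turn the per-clock figures into whole-chip peak ratios.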

One of the newest features for Blackwell is NVIDIA Quasar Quantization, which combines optimized libraries, hardware and software Transformer Engines, and low-precision numerical algorithms so that low-precision formats such as FP4 can deliver high-accuracy results. Compared to BF16, quantized FP4 achieves the same MMLU scores in LLMs and the same accuracy on both Nemotron-4 15B and even the 340B model.

Another big aspect of the NVIDIA Blackwell AI platform is 5th Gen NVLINK, which connects each GPU to the platform through 18 NVLINK links at 100 GB/s each, for a total of 1.8 TB/s, with each link using two lanes at 200 Gbps PAM4 (x2@200 Gbps-PAM4).
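
The 1.8 TB/s figure follows directly from the link parameters. This Python sketch assumes the 200 Gbps rate is per lane, per direction, and ignores encoding and protocol overhead, so it reproduces the headline number rather than usable throughput.

```python
LINKS_PER_GPU = 18
LANES_PER_LINK = 2        # the "x2" in x2@200 Gbps-PAM4
LANE_GBPS = 200           # ASSUMPTION: per lane, per direction, raw signaling rate

def link_gbytes_bidir(lanes: int, lane_gbps: int) -> float:
    """Bidirectional GB/s of one link: lanes x rate, both directions, 8 bits per byte."""
    per_direction_gbps = lanes * lane_gbps   # 400 Gb/s each way
    return 2 * per_direction_gbps / 8        # 100.0 GB/s

per_link_gbytes = link_gbytes_bidir(LANES_PER_LINK, LANE_GBPS)
per_gpu_tbytes = LINKS_PER_GPU * per_link_gbytes / 1000

print(per_link_gbytes, per_gpu_tbytes)  # 100.0 1.8
```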

There's also the 4th Gen NVLINK Switch chip, which sits inside the NVLINK Switch Tray and features a die size of over 800 mm² (TSMC 4NP). These chips extend NVLINK to 72 GPUs in GB200 NVL72 racks, offering 7.2 TB/s of full all-to-all bidirectional bandwidth over 72 ports and 3.6 TFLOPS of SHARP in-network compute. Each tray houses two of these switches for a combined bandwidth of 14.4 TB/s.
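
The switch numbers can be cross-checked against the per-GPU link count. Assuming (our assumption) that each of a GPU's 18 NVLINK links terminates on a different switch chip, every switch needs one port per GPU, which matches the 72 ports quoted above. The Python sketch below works out how many switch chips and trays that implies.

```python
import math

GPUS = 72                 # GB200 NVL72
LINKS_PER_GPU = 18        # 5th Gen NVLINK links per Blackwell GPU
PORTS_PER_SWITCH = 72     # NVLINK Switch chip ports
SWITCHES_PER_TRAY = 2
PORT_GBPS_BIDIR = 100     # GB/s per port, the same as one NVLINK link

total_links = GPUS * LINKS_PER_GPU                           # 1296 GPU-side links
switch_chips = math.ceil(total_links / PORTS_PER_SWITCH)     # 18 switch chips
switch_trays = math.ceil(switch_chips / SWITCHES_PER_TRAY)   # 9 trays
per_switch_tbps = PORTS_PER_SWITCH * PORT_GBPS_BIDIR / 1000  # 7.2 TB/s
per_tray_tbps = SWITCHES_PER_TRAY * per_switch_tbps          # 14.4 TB/s

print(switch_chips, switch_trays, per_switch_tbps, per_tray_tbps)
```

On those assumptions, an NVL72 rack would need 18 switch chips, or nine dual-switch trays, and any two GPUs could reach each other through a single switch hop.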

All of this comes together in the NVIDIA GB200 Grace Blackwell Superchip, an AI computing powerhouse with one Grace CPU and two Blackwell GPUs (four GPU dies). The board uses the NVLINK-C2C interconnect and offers 40 PetaFLOPS of FP4 and 20 PetaFLOPS of FP8 compute. A single Grace Blackwell tray packs two Grace CPUs (72 cores each) and four Blackwell GPUs (eight GPU dies).

The NVLINK Spine is then used in GB200 NVL72 and NVL36 servers, which offer up to 36 Grace CPUs and 72 Blackwell GPUs, all fully connected via NVLINK Switch. The NVL72 configuration offers 720 PetaFLOPS of training and 1,440 PetaFLOPS of inference performance, supports models of up to 27 trillion parameters, and provides up to 130 TB/s of multi-node bandwidth.
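
Scaling the per-GPU figures up to a full rack reproduces the NVL72 headline numbers. In this Python sketch, per-GPU FP4 and FP8 throughput is derived by halving the GB200 Superchip figures; matching the "training" figure to FP8 and the "inference" figure to FP4 is our reading of the numbers, not something NVIDIA states here.

```python
# Per-GPU figures derived from the GB200 Superchip numbers above
# (40 PFLOPS FP4 and 20 PFLOPS FP8 shared by two Blackwell GPUs).
FP4_PFLOPS_PER_GPU = 40 / 2     # 20.0
FP8_PFLOPS_PER_GPU = 20 / 2     # 10.0
NVLINK_TBPS_PER_GPU = 1.8
GPUS_PER_TRAY = 4
CPUS_PER_TRAY = 2

def rack_totals(gpus: int) -> dict:
    """Aggregate per-GPU peak figures for a rack with `gpus` Blackwell GPUs."""
    trays = gpus // GPUS_PER_TRAY
    return {
        "compute_trays": trays,
        "grace_cpus": trays * CPUS_PER_TRAY,
        "fp4_pflops": gpus * FP4_PFLOPS_PER_GPU,   # appears to match the "inference" figure
        "fp8_pflops": gpus * FP8_PFLOPS_PER_GPU,   # appears to match the "training" figure
        "nvlink_tbps": round(gpus * NVLINK_TBPS_PER_GPU, 1),
    }

print(rack_totals(72))
```

72 GPUs x 1.8 TB/s comes to 129.6 TB/s, which lines up with the roughly 130 TB/s bandwidth figure.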

Lastly, there's Spectrum-X, which NVIDIA calls the world's first Ethernet fabric built for AI. It comprises two chips: the Spectrum-4 switch, with 100 billion transistors, 51.2 Tb/s of bandwidth, and either 64x 800G or 128x 400G ports; and the Bluefield-3 DPU, with 16 Arm Cortex-A78 cores, 256 threads, and 400 Gb/s Ethernet. These two AI Ethernet chips come together in the Spectrum-X800 rack, an end-to-end platform for cloud AI workloads.
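
The two Spectrum-4 port configurations are simply two ways of carving up the same 51.2 Tb/s of switching capacity, as this short Python sketch shows. The helper name is hypothetical, and it only checks raw port arithmetic, not which port speeds the switch actually supports.

```python
SPECTRUM4_CAPACITY_GBPS = 51_200  # 51.2 Tb/s, as quoted above

def max_ports(port_speed_gbps: int, capacity_gbps: int = SPECTRUM4_CAPACITY_GBPS) -> int:
    """Number of ports of a given speed that fit in the switch's aggregate capacity."""
    return capacity_gbps // port_speed_gbps

for speed in (800, 400):
    print(f"{speed}G ports: {max_ports(speed)}")  # 64, then 128
```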

Combined, NVIDIA's Blackwell AI platform delivers a 30x uplift in real-time inference over Hopper along with a 25x improvement in energy efficiency. But NVIDIA is just getting started: after Blackwell, the green team plans to launch Blackwell Ultra, with increased compute density and memory, in 2025, followed by Rubin and Rubin Ultra, with HBM4 and brand-new architectures, in 2026-2027. The entire CPU, networking, and interconnect ecosystem will also receive major updates throughout 2025-2027.
