AMD Breaks Down Instinct MI300X MCM GPU: Full Chip Packs 320 “CDNA 3” Compute Units, 192 GB HBM3 With 288 GB HBM3e Upgrade This Year

AMD Breaks Down Instinct MI300X MCM GPU: Full Chip Packs 320 “CDNA 3” Compute Units, 192 GB HBM3 With 288 GB HBM3e Upgrade This Year

AMD has detailed its Instinct MI300X "CDNA 3" GPUs ahead of its MI325X launch next quarter, detailing the GPU structure designed for AI workloads.

AMD's MI300X is the third iteration of the Instinct accelerators which has been designed for the AI computing segment. The chip also comes in the MI300A flavor which is an exascale-APU optimized part that offers a combination of Zen 5 cores in two chiplets while the remaining leverage CDNA 3 GPU cores.

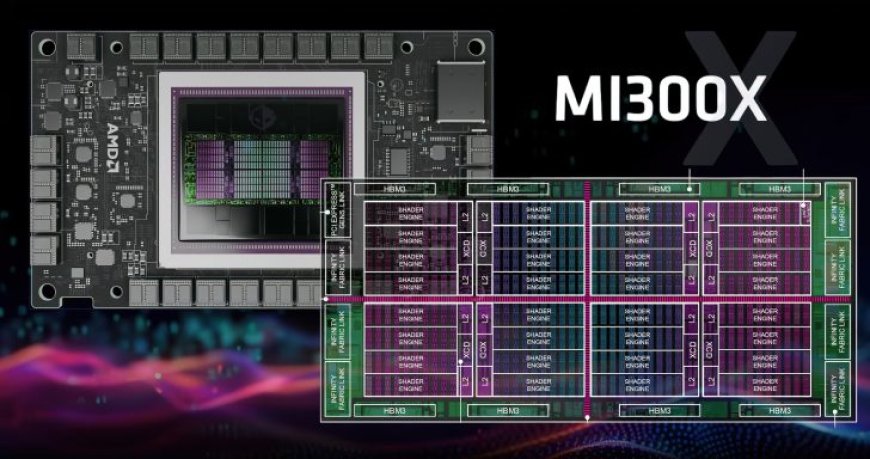

AMD has broken down the whole Instinct MI300X to give us an exact representation of what lays underneath the hood of this massive AI product. For starters, the AMD Instinct MI300X features a total of 153 billion transistors, featuring a mix of TSMC 5nm and 6nm FinFET process nodes. The eight chaplets feature four shared engines and each shared engine packs 10 compute units.

The whole chip packs 32 shader engines with a total of 40 shader engines in a singular XCD and 320 in total on the entire package. Each XCD has its dedicated L2 cache and the outskirts of the package feature the Infinity Fabric Link, 8 HBM3 IO sites, and a single PCIe Gen 5.0 link with 128 GB/s of total bandwidth which connects the MI300X to an AMD EPYC CPU.

AMD is using the fourth-generation Infinity Fabric on its Instinct MI300X chip which offers up to 896 GB/s of bandwidth. The chip also incorporates an Infinity Fabric Advanced Package link which connects all chips using 4.8 TB/s of bisection bandwidth while the XCD/IOD interface is rated at 2.1 TB/s bandwidth.

Diving into the CDNA 3 architecture itself, the latest design includes:

The full block diagram of the Mi300X architecture is shared below and you can see that each XCD has two compute units disabled which total 304 CUs out of the full 320 CU design. The full chip is configured with 20,480 cores while the MI300X is configured with 19,456 cores. There's also 256MB of dedicated Infinity Cache onboard the chip.

The full breakdown of the cache and memory hierarchy on the MI300X is visualized below:

Each CDNA compute unit is composed of a scheduler, local data share, vector registers, vector units, matrix core, and L1 cache. Coming to the performance figures, the MI300X offers:

AMD's Instinct MI300X is also the first accelerator to feature an 8-stack HBM3 memory design with NVIDIA following up with its Blackwell GPUs later this year. The new 8-site design allowed AMD to achieve 1.5x higher capacity while the new HBM3 standard delivered a 1.6x increase in bandwidth versus the MI250X.

AMD also states that its larger and faster memory configuration on the Instinct Mi300X allows them to handle bigger LLM (FP16) sizes of up to 70B in Training and 680B in Inference while NVIDIA HGX H100 systems can only sustain model sizes of up to 30B in Training and 290B in inference.

One interesting feature of the Instinct Mi300X is AMD's Spatial portioning which allows users to partition the XCDs as per the demands of their workloads. All XCDs operate together as a single processor but can also be partitioned and grouped to appear as multiple GPUs.

AMD will be upgrading its Instinct platform with the MI325X in October which will feature HBM3e memory and increased capacities of up to 288 GB. Some of the features of the MI325X include:

NVIDIA's answer will arrive next year in the form of Blackwell Ultra with 288 GB HBM3e so AMD will once again stay ahead in this crucial AI market where larger AI models are coming out and require bigger memory capacities to support the billions or trillions of partameters.

What's Your Reaction?