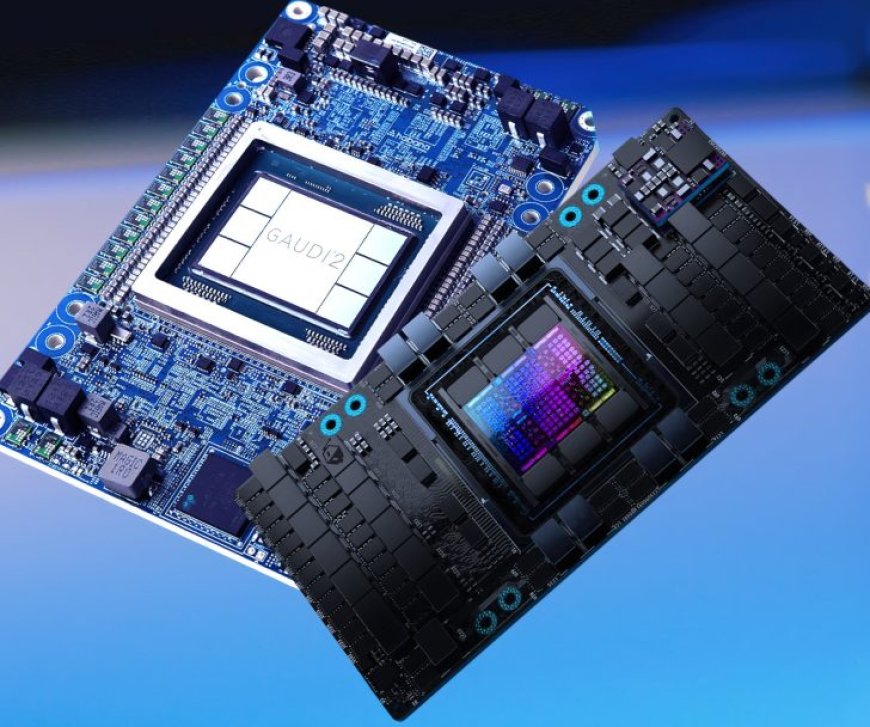

Intel Gaudi 2 Accelerator Up To 55% Faster Than NVIDIA H100 In Stable Diffusion, 3x Faster Than A100 In AI Benchmark Showdown


Stability AI has published a new blog post benchmarking Intel's Gaudi 2 against NVIDIA's H100 and A100 GPU accelerators. The results suggest that Intel's accelerator offers strong value and is a respectable alternative for customers who want a fast, readily available option instead of NVIDIA's offerings.

Stability AI builds open models that handle a diverse range of tasks efficiently. For this comparison, the company took two of its models, including Stable Diffusion 3, and benchmarked them on the most popular AI accelerators from NVIDIA and Intel to see how they perform against each other.

In Stable Diffusion 3, the next chapter in the highly popular text-to-image model family, Intel's Gaudi 2 AI accelerator delivered some exceptional training results. The model ranges from 800M to 8B parameters and was tested using the 2B-parameter version. For the comparison, each system used 2 nodes with a total of 16 accelerators, with the batch size set to 16 per accelerator (256 in total). The end result was the Intel Gaudi 2 offering a 56% speedup versus the H100 80GB GPU and a 2.43x speedup versus the A100 80GB GPU.

The 96 GB HBM capacity also allowed Intel's Gaudi 2 to fit a batch size of 32 per accelerator, for a total batch size of 512. This raised throughput to 1,254 images per second, a speedup of 35% over the batch-16 Gaudi 2 run, 2.10x over the H100 80GB, and 3.26x over the A100 80GB.
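To make the arithmetic behind these figures easier to follow, here is a minimal Python sketch. It uses only the numbers quoted above; the helper names (`global_batch_size`, `implied_baseline`) are made up for illustration, and the baseline throughputs it prints are back-calculated from rounded ratios, so they are approximations rather than measured results.

```python
# Figures quoted in the article for the 2-node (16-accelerator) training run.
ACCELERATORS = 16
GAUDI2_BATCH32_IMAGES_PER_SEC = 1254.0

# Reported speedup of the batch-32 Gaudi 2 run over each baseline.
REPORTED_SPEEDUPS = {
    "Gaudi 2, batch 16": 1.35,  # "35% faster"
    "H100 80GB": 2.10,
    "A100 80GB": 3.26,
}


def global_batch_size(per_accelerator: int, accelerators: int = ACCELERATORS) -> int:
    """Total batch size across all accelerators in the run."""
    return per_accelerator * accelerators


def implied_baseline(throughput: float, speedup: float) -> float:
    """Baseline throughput implied by a throughput and its reported speedup."""
    return throughput / speedup


print("Global batch at 16 per accelerator:", global_batch_size(16))
print("Global batch at 32 per accelerator:", global_batch_size(32))

for name, speedup in REPORTED_SPEEDUPS.items():
    baseline = implied_baseline(GAUDI2_BATCH32_IMAGES_PER_SEC, speedup)
    # Approximate only: the published ratios are rounded.
    print(f"{name}: roughly {baseline:.0f} images/s implied")
```

Because the speedups are rounded to two or three significant figures, the implied baselines should be read as ballpark values only.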

Scaling further to 32 nodes (256 accelerators) for both the Gaudi 2 and the A100 80GB, the Intel solution delivers 49.4 images per second per device versus just 15.6 on the A100 solution, a 3.16x advantage.
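The per-device numbers can be turned into a ratio and a rough cluster-wide figure with a few lines of Python. This is a sketch that assumes every one of the 256 devices sustains the quoted per-device rate; the aggregate values are derived here and do not appear in Stability AI's post.

```python
# Per-device training throughput quoted for the 32-node (256-accelerator) run.
DEVICES = 256
IMAGES_PER_SEC_PER_DEVICE = {
    "Gaudi 2": 49.4,
    "A100 80GB": 15.6,
}

# Ratio of Gaudi 2 to A100 per-device throughput.
ratio = IMAGES_PER_SEC_PER_DEVICE["Gaudi 2"] / IMAGES_PER_SEC_PER_DEVICE["A100 80GB"]

# Assumption: aggregate throughput is simply per-device rate times device count.
aggregate = {
    name: rate * DEVICES for name, rate in IMAGES_PER_SEC_PER_DEVICE.items()
}

print(f"Gaudi 2 vs A100 per-device ratio: {ratio:.3f}x")
for name, total in aggregate.items():
    print(f"{name}: about {total:,.0f} images/s across {DEVICES} devices")
```

The computed ratio is slightly above the quoted 3.16x, which suggests the published figure was truncated rather than rounded.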

While training performance is superb on the Gaudi 2 AI accelerators, NVIDIA still appears to hold the throne in inference thanks to its TensorRT optimizations, which made huge progress over the past year, and the green team keeps making great strides in this ecosystem. With TensorRT, the A100 GPUs are said to produce images up to 40% faster than the Gaudi 2 accelerators on the same Stable Diffusion 3 8B model.

On inference tests with the Stable Diffusion 3 8B parameter model the Gaudi 2 chips offer inference speed similar to Nvidia A100 chips using base PyTorch. However, with TensorRT optimization, the A100 chips produce images 40% faster than Gaudi 2. We anticipate that with further optimization, Gaudi 2 will soon outperform A100s on this model. In earlier tests on our SDXL model with base PyTorch, Gaudi 2 generates a 1024x1024 image in 30 steps in 3.2 seconds, versus 3.6 seconds for PyTorch on A100s and 2.7 seconds for a generation with TensorRT on an A100.

The higher memory and fast interconnect of Gaudi 2, plus other design considerations, make it competitive to run the Diffusion Transformer architecture that underpins this next generation of media models.

via Stability AI
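The SDXL latencies in the quote can be compared directly. The sketch below converts the three quoted times into per-step latency and relative speed; the dictionary keys and the `relative_speed` helper are illustrative names, and the calculation assumes each time covers exactly one 1024x1024 image at 30 steps, as the quote states.

```python
# SDXL inference latencies quoted by Stability AI (seconds per 1024x1024 image).
STEPS = 30
LATENCY_S = {
    "Gaudi 2 (PyTorch)": 3.2,
    "A100 (PyTorch)": 3.6,
    "A100 (TensorRT)": 2.7,
}


def relative_speed(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is than the baseline (>1 means faster)."""
    return baseline_s / candidate_s


for name, seconds in LATENCY_S.items():
    print(f"{name}: {seconds / STEPS * 1000:.0f} ms per step, "
          f"{1 / seconds:.2f} images/s")

gaudi = LATENCY_S["Gaudi 2 (PyTorch)"]
print(f"Gaudi 2 vs A100 PyTorch: "
      f"{relative_speed(LATENCY_S['A100 (PyTorch)'], gaudi):.3f}x")
print(f"A100 TensorRT vs Gaudi 2: "
      f"{relative_speed(gaudi, LATENCY_S['A100 (TensorRT)']):.3f}x")
```

On these SDXL numbers the TensorRT gap is smaller than the 40% quoted for the Stable Diffusion 3 8B model, so the two comparisons should not be conflated.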

Lastly, there are results for the second model, Stable Beluga 2.5 70B, a fine-tuned version of LLaMA 2 70B. With no extra optimizations and running under PyTorch, the 256 Intel Gaudi 2 AI accelerators achieved an average throughput of 116,777 tokens/second. That was about 28% faster than the A100 80GB solution running under TensorRT.
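For a sense of scale, the cluster figure can be broken down per accelerator. This sketch assumes the 116,777 tokens/second figure is the aggregate across all 256 accelerators, which is how the sentence above reads; the per-accelerator value is derived here and is not a number published in the post.

```python
# Stable Beluga 2.5 70B figures quoted in the article.
ACCELERATORS = 256
TOTAL_TOKENS_PER_SEC = 116_777  # assumed to be the aggregate over all accelerators

# Even split across devices; real per-device rates may vary.
tokens_per_sec_per_accelerator = TOTAL_TOKENS_PER_SEC / ACCELERATORS

print(f"About {tokens_per_sec_per_accelerator:.1f} tokens/s per accelerator")
```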

All of this goes to show just how competitive the AI landscape is becoming, and that what matters most is not the hardware alone but the software and optimizations for each specific accelerator. Hardware is essential, but even the latest and greatest silicon will struggle in this space without a solid software foundation to drive all of those cores, memory, and AI-specific engines.

NVIDIA has known this for a long time, while Intel and AMD have only recently started solidifying their software suites for AI. Whether they will keep playing catch-up with the green giant or manage to take on the CUDA and TensorRT ecosystem with swift software releases remains to be seen. These benchmarks show that Intel is becoming a viable option, not only as an alternative but as a genuine competitor to NVIDIA's offerings. With future Gaudi and AI GPU products, we can expect a more robust AI segment with strong solutions for customers to choose from rather than relying on a single company.
