AMD To Ship Huge Quantities Of Instinct MI300X Accelerators, Capturing 7% of AI Market

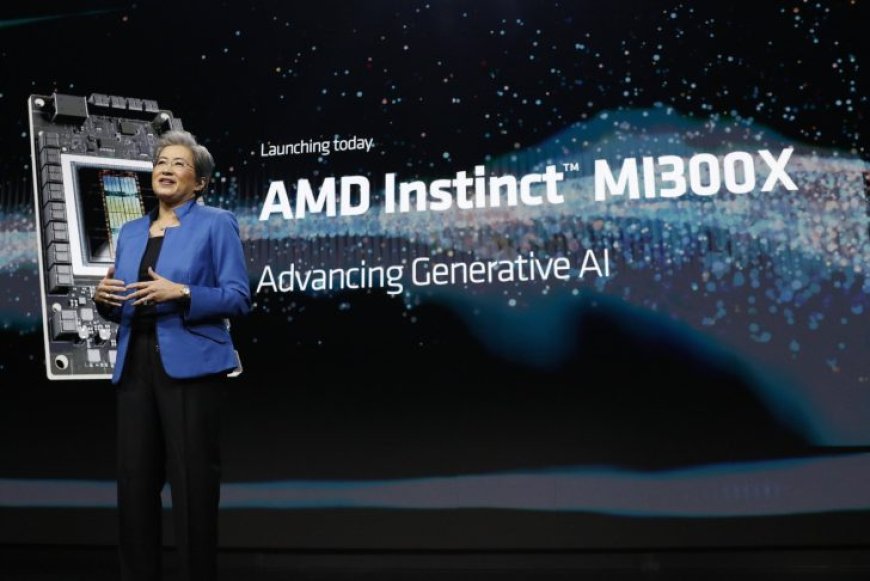

AMD's "gold rush" in the AI market may finally have arrived, as industry reports indicate that the firm faces massive demand for its cutting-edge Instinct MI300X AI accelerators.

Looking back at how the AI market has developed, AMD's presence has been hard to spot, and since the beginning of 2023 nearly everyone has been "cheering" for NVIDIA. However, Team Red's efforts to capture the market's attention may finally be paying off: the firm is reported to be shipping vast quantities of MI300X AI accelerators, and the MI300X alone is expected to secure around 7% of the AI accelerator market, a considerable milestone for the company.

You might ask what has caused this sudden shift toward AMD's flagship Instinct accelerator. The answer is simple: NVIDIA's supply chain has been stretched thin as the company works to manufacture and deliver large volumes of its AI products.

This has created a massive order backlog, delaying tech firms that want computing capacity as quickly as possible. NVIDIA is trying to address the problem, working with firms such as TSMC and Synopsys, which are adopting its new cuLitho computational lithography technology, but demand still appears to outstrip supply. Cost is another major factor, and with the Blackwell GPUs announced earlier today, prices are not expected to come down at all.

AMD, on the other hand, promises a much more robust supply and a better price-to-performance ratio, which is why the MI300X has gained considerable popularity and become a top priority for professionals in the field. Here is how the AMD MI300X's performance compares with NVIDIA's H100:

AMD's AI ventures are expected to gain traction as firms such as Microsoft and Meta test Team Red's AI offerings. The company is expected to ship large quantities of the MI300X soon, which could tip the balance in AMD's favor, provided it delivers the performance it has promised. Exciting times lie ahead for everyone involved in the AI race.

News Source: MyDrivers