AMD Launches ROCm 6.1.3 Open AI Software Alongside Radeon PRO W7900 Dual Slot GPU

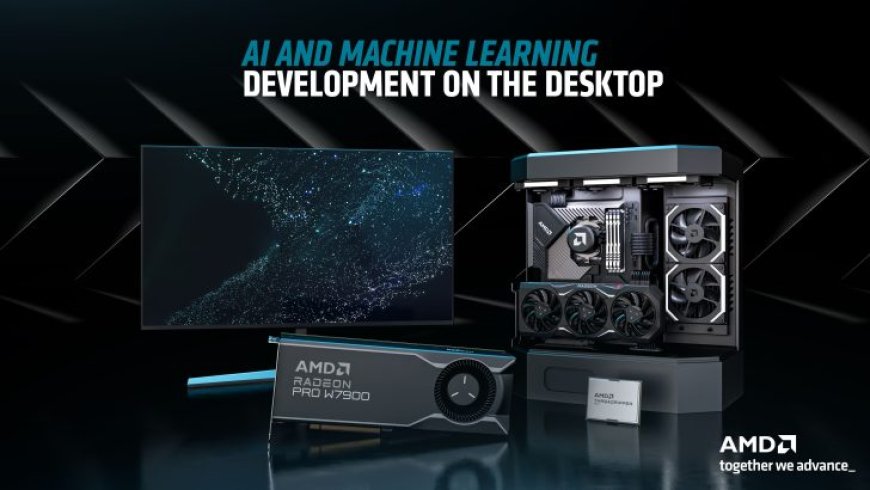

AMD has officially launched its ROCm 6.1.3 open software suite, which adds AI capabilities and broader GPU support, alongside the Radeon PRO W7900 Dual Slot GPU.

First announced at Computex 2024, the Radeon PRO W7900 Dual Slot GPU is now formally launching in the retail segment. Priced at $3,499 US, the Radeon PRO W7900 is said to offer leadership performance-per-dollar and a large 48 GB memory pool, enabling faster performance in AI-oriented workloads such as LLMs (Large Language Models).

Coming to the specifications, the AMD Radeon PRO W7900 graphics card uses a dual-slot design, making it a good fit for workstation setups, which can house up to four of these AI-ready cards. Internally, the graphics card features the Navi 31 XTX GPU core with 6144 cores spread across 96 compute units. The GPU has a 384-bit wide memory bus and comes with 48 GB of GDDR6 memory, delivering up to 864 GB/s of bandwidth. The card also packs 96 MB of Infinity Cache, which provides additional effective bandwidth to this high-end GPU.
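The headline figures above can be cross-checked with simple arithmetic. The short Python sketch below uses only the numbers quoted in this article. The cores-per-compute-unit and per-pin data rate values are derived from those numbers, not quoted from an AMD spec sheet.

```python
# Figures quoted in the article for the Radeon PRO W7900 Dual Slot.
SHADER_CORES = 6144
COMPUTE_UNITS = 96
BUS_WIDTH_BITS = 384
BANDWIDTH_GB_S = 864

# Cores per compute unit implied by the two core counts.
cores_per_cu = SHADER_CORES // COMPUTE_UNITS

# Peak bandwidth = (bus width in bytes) x (per-pin data rate in Gbps),
# so the implied data rate is the bandwidth divided by the bus width in bytes.
bus_width_bytes = BUS_WIDTH_BITS // 8
implied_data_rate_gbps = BANDWIDTH_GB_S / bus_width_bytes

print(f"{cores_per_cu} cores per compute unit")
print(f"{implied_data_rate_gbps:g} Gbps per pin over a {BUS_WIDTH_BITS}-bit bus")
```

The derived values (64 cores per compute unit, 18 Gbps per pin) are consistent with the quoted core count and bandwidth, which suggests the listed specifications agree with each other.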

Following are some of the main highlights of the graphics card:

Besides the Radeon PRO W7900 Dual Slot GPU launch, AMD is also making its ROCm 6.1.3 open software suite publicly available. The new ROCm release brings improved accessibility and wider support for consumer-tier graphics cards in the Radeon and Radeon PRO series. The release highlights include:

AMD states that ROCm 6.1.3 supports up to four qualified Radeon RX and Radeon PRO GPUs (Radeon RX 7900 XTX, 7900 XT, 7900 GRE, Radeon PRO W7900, PRO W7900 DS, PRO W7800). Any combination of up to four of these GPUs can now be installed directly in a workstation, which AMD says gives customers more performance, scalability, and accessibility. Each GPU can run inference independently and return its own response. The ROCm 6.1.3 software stack also adds beta support for Windows Subsystem for Linux 2 (WSL 2).
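To illustrate what "each GPU computes inference independently" can look like in practice, here is a minimal Python sketch that spreads independent requests across up to four GPUs in round-robin order. It is not AMD's scheduler or part of ROCm. The function name `assign_requests` and the prompt strings are hypothetical, and the `cuda:N` labels assume a ROCm build of PyTorch, which addresses AMD GPUs through that device naming.

```python
from itertools import cycle

MAX_QUALIFIED_GPUS = 4  # limit stated by AMD for ROCm 6.1.3


def assign_requests(prompts, gpu_count):
    """Spread independent inference requests across GPUs, round-robin."""
    if not 1 <= gpu_count <= MAX_QUALIFIED_GPUS:
        raise ValueError(f"gpu_count must be between 1 and {MAX_QUALIFIED_GPUS}")
    # Hypothetical device labels; ROCm builds of PyTorch address AMD GPUs
    # through the "cuda:N" device strings.
    devices = [f"cuda:{i}" for i in range(gpu_count)]
    plan = {device: [] for device in devices}
    # Each prompt goes to exactly one device; no GPU depends on another.
    for prompt, device in zip(prompts, cycle(devices)):
        plan[device].append(prompt)
    return plan


plan = assign_requests(["q1", "q2", "q3", "q4", "q5"], gpu_count=4)
for device, batch in plan.items():
    print(device, batch)
```

Because every request is handled by a single GPU, this layout needs no communication between cards. The trade-off is that each model copy has to fit within one card's memory.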

More ML performance for your desktop

via AMD

With up to 48 GB of memory available per GPU, a four-GPU configuration provides a combined pool of up to 192 GB (4 × 48 GB) for AI workloads.
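As a rough way to relate these capacities to model size, the Python sketch below estimates how many 48 GB cards are needed just to hold a model's weights. It is a back-of-the-envelope calculation under stated assumptions: it counts weights only (no KV cache, activations, or framework overhead), treats 1 GB as 1e9 bytes, and uses hypothetical 13-billion and 70-billion-parameter models at 2 bytes per parameter (16-bit weights). Whether a given runtime can actually split one model across several cards is a separate question that this sketch does not address.

```python
import math

GPU_MEMORY_GB = 48  # per-GPU memory quoted in the article
MAX_GPUS = 4        # maximum qualified GPUs under ROCm 6.1.3


def weights_size_gb(params_billions, bytes_per_param):
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def gpus_needed(params_billions, bytes_per_param):
    """Minimum GPU count whose combined memory holds the weights alone."""
    size = weights_size_gb(params_billions, bytes_per_param)
    return math.ceil(size / GPU_MEMORY_GB)


combined_gb = GPU_MEMORY_GB * MAX_GPUS
print(f"Combined memory across {MAX_GPUS} GPUs: {combined_gb} GB")

# Hypothetical model sizes, 16-bit weights (2 bytes per parameter).
for params in (13, 70):
    size = weights_size_gb(params, 2)
    count = gpus_needed(params, 2)
    fits = "fits" if count <= MAX_GPUS else "does not fit"
    print(f"{params}B parameters: ~{size} GB of weights, {count} GPU(s), {fits}")
```

Under these assumptions, the 13-billion-parameter example fits on a single card, while the 70-billion-parameter example needs roughly 140 GB for weights and therefore at least three cards' worth of memory.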

While PyTorch and ONNX remain the go-to choices, ROCm 6.1.3 also adds qualification for the TensorFlow framework, giving users another option for AI development.
