AMD Instinct MI250 Sees Boosted AI Performance With PyTorch 2.0 & ROCm 5.4, Closes In On NVIDIA GPUs In LLMs


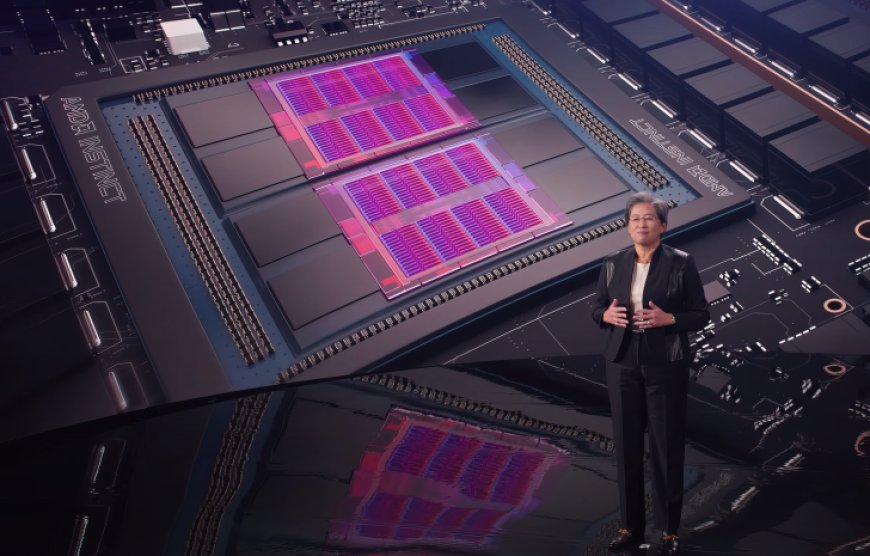

AMD Instinct GPUs such as the MI250 have received a major boost in AI performance, bringing them closer to NVIDIA's chips.

In a blog post, MosaicML showed how PyTorch 2.0 and ROCm 5.4 increase the performance of AMD data center GPUs such as the Instinct series without any code changes. The software vendor offers enhanced support for ML and LLM training across a wide range of NVIDIA and AMD hardware using 16-bit precision (FP16 / BF16). These recent releases have allowed MosaicML to unleash even better performance from AMD Instinct accelerators with its LLM Foundry stack.

The highlights of the results were as follows:

While AMD's Instinct MI250 GPU offers a slight edge over NVIDIA's A100 in FP16 FLOPs (without sparsity), memory capacity, and memory bandwidth, it should be noted that the MI250 can only scale up to 4 accelerators in a single system, whereas the A100 can scale up to 8.

Taking a deeper look, both the AMD and NVIDIA hardware were able to launch AI training workloads with LLM Foundry with ease. Performance was evaluated using two metrics: training throughput (tokens/sec/GPU) and overall compute performance (TFLOP/s/GPU).
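The two metrics are related: multiplying tokens per second by the FLOPs spent per token gives FLOP/s. The sketch below assumes the common rule of thumb of roughly 6 × N FLOPs per training token for a dense model with N parameters (ignoring attention FLOPs); this is an illustrative approximation, not necessarily the exact formula LLM Foundry reports. The function name and the example throughput figure are hypothetical.

```python
def model_tflops_per_gpu(tokens_per_sec_per_gpu: float, n_params: float) -> float:
    """Convert training throughput (tokens/sec/GPU) into TFLOP/s per GPU.

    Assumption: ~6 * N FLOPs per token (about 2N forward + 4N backward)
    for a dense model with N parameters; attention FLOPs are ignored.
    """
    flops_per_token = 6 * n_params
    return tokens_per_sec_per_gpu * flops_per_token / 1e12


# Hypothetical example: a 1B-parameter model at 20,000 tokens/sec/GPU.
print(model_tflops_per_gpu(20_000, 1e9))  # 120.0
```

Because the FLOPs-per-token factor grows with model size, the same hardware reports far fewer tokens/sec on a 13B model than on a 1B model even when its TFLOP/s stays similar.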

Training throughput was measured across models ranging from 1 billion to 13 billion parameters. Testing showed that the AMD Instinct MI250 delivered 80% of the performance of NVIDIA's A100 40GB and 73% of the performance of the 80GB variant. NVIDIA retained its lead in all of the benchmarks, but it should be noted that the NVIDIA systems also ran twice as many GPUs in the tests. The blog post also notes that further training improvements are expected for AMD Instinct accelerators in the future.
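Since the 80% and 73% figures are per-GPU, the whole-node picture also depends on GPU count. The sketch below compares a 4-GPU MI250 node with an 8-GPU A100 node, assuming (as a simplification) that throughput scales linearly with the number of GPUs; the helper name is hypothetical.

```python
# Per-GPU throughput of the MI250 relative to each A100 variant (from the figures above).
RELATIVE_PER_GPU = {
    "A100 40GB": 0.80,
    "A100 80GB": 0.73,
}


def node_throughput_ratio(per_gpu_ratio: float, amd_gpus: int = 4, nvidia_gpus: int = 8) -> float:
    """Whole-node throughput ratio (AMD node / NVIDIA node).

    Assumption: throughput scales linearly with GPU count within a node.
    """
    return per_gpu_ratio * amd_gpus / nvidia_gpus


for name, ratio in RELATIVE_PER_GPU.items():
    print(f"vs {name}: per-GPU {ratio:.0%}, per-node {node_throughput_ratio(ratio):.1%}")
```

Real multi-GPU scaling is rarely perfectly linear, so the per-node numbers are only a rough upper-level comparison.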

AMD is already cooking up its next-gen Instinct MI300 accelerators for HPC and AI workloads. The company demonstrated the chip running an LLM with 40 billion parameters on a single accelerator. The MI300 will also scale to configurations of up to 8 GPUs or APUs. The chip will compete against NVIDIA's H100 and whatever the green team has been working on for release in the coming year. The MI300 will offer the highest memory capacity of any GPU, with 192 GB of HBM3 and much higher bandwidth than NVIDIA's solution. It will be interesting to see whether these software advances on the AMD front will be enough to erode the 90%+ market share that NVIDIA has captured in the AI space.
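A quick back-of-the-envelope check shows why 192 GB matters for a 40-billion-parameter model. The sketch assumes 16-bit (FP16/BF16) weights at 2 bytes per parameter and counts only the weights; activations, KV cache, and optimizer state are ignored, so real usage would be higher. The function name is hypothetical.

```python
def weights_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 corresponds to FP16/BF16. Activations, KV cache,
    and optimizer state are NOT included.
    """
    return n_params * bytes_per_param / 1e9


MI300_HBM_GB = 192  # HBM3 capacity quoted above

need = weights_gb(40e9)  # 40B-parameter LLM in 16-bit precision
print(f"{need:.0f} GB of weights; fits in {MI300_HBM_GB} GB: {need <= MI300_HBM_GB}")
```

At roughly 80 GB for the weights alone, the model leaves headroom on a 192 GB accelerator for the additional runtime memory that the estimate ignores.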
