AMD Instinct AI Accelerator Lineup Gets MI325X Refresh In Q4, 3nm MI350 “CDNA 4” In 2025, CDNA MI400 “CDNA Next” In 2026

AMD has announced its new AI accelerators, including the Instinct MI325X "CDNA 3", MI350X "CDNA 4", and MI400 "CDNA Next", for data centers and the cloud.

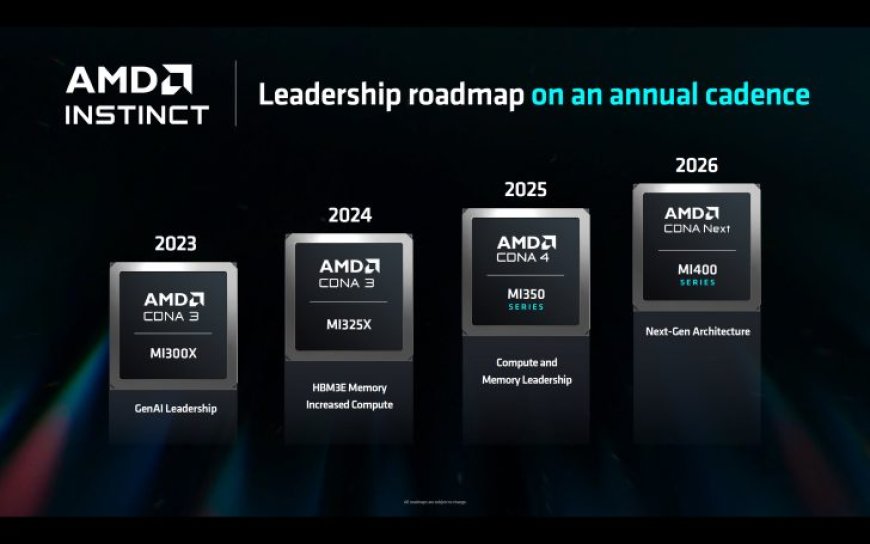

AMD appears to be accelerating its Instinct AI accelerator roadmap quite aggressively, following similar announcements by NVIDIA during its recent keynote. The company now plans to offer a new AI accelerator, either a refresh or a completely new product, every year.

Starting with the first product, we have the AMD Instinct MI325X AI accelerator which will be using the same CDNA 3 architecture as the existing MI300 series. This accelerator will feature 288 GB of HBM3E memory, 6 TB/s memory bandwidth, 1.3 PFLOPs of FP16, and 2.6 PFLOPs of FP8 compute performance and will be able to handle up to 1 trillion parameters per server. Versus the NVIDIA H200, the AI accelerator will offer:

The AMD Instinct MI325X AI accelerator can be seen as a beefed-up refresh of the MI300X series and it is being previewed today at Computex 2024 with a launch planned for Q4 2024. It also uses the same chiplet housing structure as the existing MI300X series but we can expect 12-Hi HBM3E sites, allowing for increased capacities.
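The "1 trillion parameters per server" figure can be sanity-checked with some quick arithmetic. The sketch below is a rough estimate, not AMD's methodology: it assumes an 8-accelerator server, counts model weights only (no activations or KV cache), and uses decimal gigabytes. The 288 GB capacity comes from the figures above; the function names are made up for illustration.

```python
# Back-of-the-envelope check of the "1 trillion parameters per server" claim.
# Assumptions (not from AMD): 8 accelerators per server, weights only,
# decimal gigabytes (1 GB = 1e9 bytes).

HBM_PER_ACCELERATOR_GB = 288   # MI325X capacity quoted in the article
ACCELERATORS_PER_SERVER = 8    # assumption: a typical 8-way OAM platform
BYTES_PER_PARAM = {"FP16": 2, "FP8": 1}


def server_memory_gb(per_accel_gb=HBM_PER_ACCELERATOR_GB,
                     count=ACCELERATORS_PER_SERVER):
    """Total HBM capacity across all accelerators in one server."""
    return per_accel_gb * count


def weights_gb(num_params, dtype):
    """Memory needed to hold the model weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params, dtype):
    """True if the weights fit in the server's combined HBM."""
    return weights_gb(num_params, dtype) <= server_memory_gb()


if __name__ == "__main__":
    one_trillion = 10**12
    print("Server HBM (GB):", server_memory_gb())
    print("1T params at FP16 (GB):", weights_gb(one_trillion, "FP16"))
    print("Fits:", fits(one_trillion, "FP16"))
```

Under these assumptions, eight accelerators provide 2,304 GB of HBM3E, while a 1-trillion-parameter model at FP16 needs about 2,000 GB for its weights, so the claim is at least plausible on capacity alone.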

But AMD is also looking into the future and announced its next-gen Instinct MI350 series which will be available in 2025. AMD states that the Instinct MI350 series will be based on a 3nm process node, also offer up to 288 GB HBM3E memory, and support FP4/FP6 data types which are also supported by NVIDIA's Blackwell GPUs. These chips will be based on the next-gen CDNA 4 architecture and will be arriving with OAM compatibility.

AMD also shared an update on its Instinct AI roadmap, which now follows an annual cadence, as mentioned above. In 2026, AMD plans to introduce its Instinct MI400 series, based on a next-gen CDNA architecture simply called "CDNA Next".

In terms of performance, the Instinct CDNA 3 architecture is expected to bring an 8x increase over CDNA 2, while the CDNA 4 architecture is expected to offer around a 35x increase over CDNA 3 GPUs. AMD is also sharing some comparison figures against NVIDIA's Blackwell B200 GPUs. The MI350 series is expected to offer 50% more memory and 20% more compute TFLOPs than the B200 offering. NVIDIA also announced its Blackwell Ultra GPU for 2025, which should push things further, so it's going to be a heated battleground within the high-end AI accelerator segment.
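To put the relative figures in context, here is a small sketch that works backwards from them. It assumes the "50% more memory" claim refers to the 288 GB MI350 configuration and that the two generational multipliers measure the same metric, neither of which the article confirms. The helper names are purely illustrative.

```python
# Working backwards from the relative claims in the article.
# Assumption: "50% more memory" is measured against the 288 GB MI350 figure.

def implied_baseline(value, percent_more):
    """Baseline that `value` exceeds by `percent_more` percent."""
    return value / (1 + percent_more / 100)


def compound(*multipliers):
    """Multiply generational speedups together (assumes the same metric)."""
    result = 1
    for m in multipliers:
        result *= m
    return result


if __name__ == "__main__":
    # Implied B200 memory capacity in GB
    print(implied_baseline(288, 50))
    # Implied CDNA 4 uplift over CDNA 2, if the 8x and 35x figures compound
    print(compound(8, 35))
```

The first result implies a 192 GB baseline for the B200, and the second suggests a 280x jump from CDNA 2 to CDNA 4 if the two multipliers are directly comparable, which vendor headline numbers often are not.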

Lastly, AMD reiterated last week's UALink (Ultra Accelerator Link) announcement, a new high-performance, open, and scalable AI interconnect infrastructure that is being developed by several vendors including Microsoft, Intel, Cisco, Broadcom, Meta, HPE & more. There's also the Ultra Ethernet Consortium, which is being positioned as the answer for scale-out AI infrastructure.

With that said, AMD looks to have a very solid foundational roadmap for its AI endeavors as it competes against the might of NVIDIA. AMD is also calling MI300 its fastest ramping product in history with several partners and vendors currently offering them in their servers.
