Survey Reveals AI Professionals Considering Switching From NVIDIA To AMD, Cite Instinct MI300X Performance & Cost

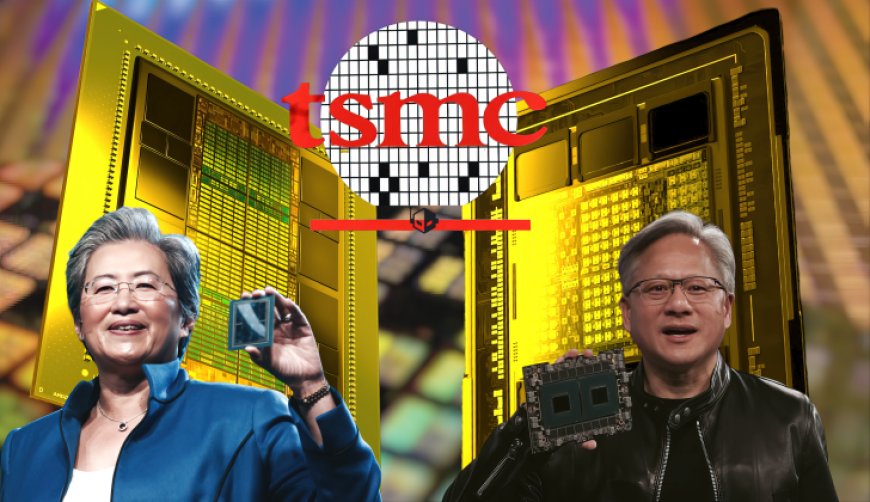

A recently conducted survey has revealed that roughly half of the AI professionals polled are considering switching from NVIDIA to AMD Instinct MI300X GPUs.

Jeff Tatarchuk, co-founder of TensorWave, revealed that in an independent study of 82 engineers and AI professionals, around 50% of respondents said they are actively considering the AMD Instinct MI300X GPU, citing its better price-to-performance ratio and wider availability compared with counterparts such as NVIDIA's H100. Jeff also says that TensorWave will deploy MI300X AI accelerators, another promising sign for Team Red, given that its Instinct lineup in general hasn't seen the same level of adoption as NVIDIA's offerings.

In a survey conducted recently among 82 engineers and AI professionals, it stated 50% of the respondents are actively considering using the AMD Instinct MI300X GPU.

The top three reasons for considering the AMD MI300X are availability, cost, and performance, in that order.

We… pic.twitter.com/ZckHBj6b20

— Jeff Tatarchuk (@jtatarchuk) March 8, 2024

For a quick rundown, the Instinct MI300X AI GPU is built entirely on the CDNA 3 architecture and packs a lot into one package. It combines 5nm and 6nm chiplets for a total of 153 billion transistors. Memory is another area with a huge upgrade: the MI300X offers 192 GB of HBM3, 50% more capacity than its predecessor, the MI250X (128 GB). Here is how it compares to NVIDIA's H100:

We recently reported how AMD's flagship Instinct AI accelerator has been causing "headaches" for its competitors. Not only are the MI300X's performance gains substantial, but AMD has also timed its release well: NVIDIA is currently weighed down by order backlogs, which have slowed its ability to take on new clients. While Team Red didn't get the start it wanted, the coming period could prove fruitful for the company, potentially putting it head-to-head with its market competitors.

News Source: Jeff Tatarchuk