Samsung’s Shinebolt, Flamebolt & Snowbolt Trademarks Hint At Next-Gen HBM DRAM For HPC

Samsung has trademarked several codenames, including Shinebolt, Flamebolt, and Snowbolt, which relate to its next-gen HBM products.

Earlier this month, it was reported that Samsung Electronics would develop a new HBM3P memory with the codename "Snowbolt." This HBM3P memory is reported to offer 5 TB/s of bandwidth per stack. Yesterday, it was reported that the company filed two more trademarks for DRAM aimed at artificial intelligence and large-scale computing.

The trademark application adds two more codenames, "Shinebolt" and "Flamebolt," to Samsung Electronics' naming scheme, joining the previous monikers Flarebolt, Aquabolt, Flashbolt, and Icebolt. The application was submitted to the Korea Intellectual Property Rights Information Service (KIPRIS).

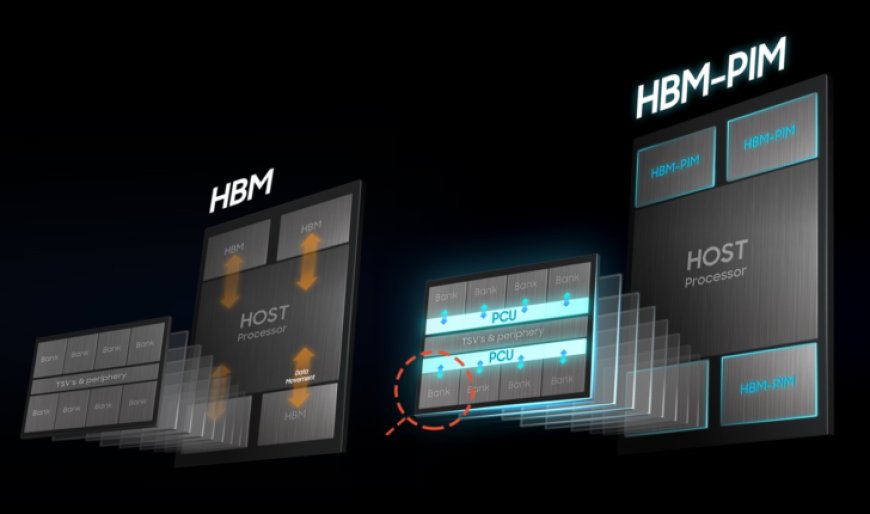

SamMobile reports that the trademarks cover products described as "DRAM modules with high bandwidth for use in high-performance computing equipment, artificial intelligence, and supercomputing equipment and DRAM with high bandwidth for use in graphic cards."

Kyung Kye-hyun, president of Samsung Electronics' Device Solutions (DS) division, recently spoke at KAIST, where he discussed the company's expectation that future memory products will drive the development of artificial intelligence on a larger scale, including the development of servers.

During a conference call with investors and the media last month, a Samsung Electronics spokesperson was quoted as saying:

> We have already supplied HBM2 and HBM2E products to major customers to provide the highest performance and highest capability products promptly that meet the needs and technology trends of the AI market, and HBM3 (16 GB and 12 GB). However, 24 GB products are also being sampled, and preparations for mass production have already been completed.
>
> Not only the current HBM3 but also the next-generation HBM3P product with higher performance and capacity required by the market is being prepared for the second half of the year with the industry's best performance.
>
> via Samsung

The Samsung executive went on to say that AI performance will rely more on robust memory options, which he expects to outperform NVIDIA's most widely used AI GPUs. He stressed that this could become a reality as early as 2028.

Samsung Electronics' earlier HBM series were Flarebolt (HBM2), Aquabolt (HBM2), Flashbolt (HBM2E), and Icebolt (HBM3).

The recently reported Snowbolt is expected to arrive in high-bandwidth DRAM modules used in HPC cloud systems, supercomputers, and artificial intelligence.

News Source: SamMobile
