NVIDIA Shares Blackwell GPU Compute Stats: 30% More FP64 Than Hopper, 30x Faster In Simulation & Science, 18X Faster Than CPUs

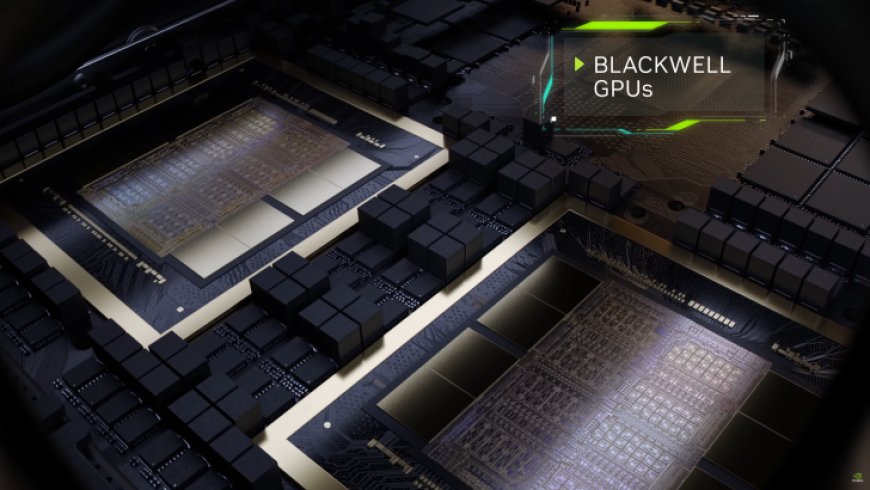

NVIDIA has shared more performance statistics for its next-gen Blackwell GPU architecture, which has taken the industry by storm. The company shared several metrics, including science, AI & simulation results, comparing Blackwell in its Grace-powered Superchip modules against the outgoing Hopper chips and competing x86 CPUs.

In a new blog post, NVIDIA explains how Blackwell GPUs are expected to boost performance in research fields such as quantum computing, drug discovery, fusion energy, physics-based simulation, scientific computing & more. When the architecture was announced at GTC 2024, the company showcased some big numbers, but we have yet to get a proper look at the architecture itself. While we wait for that, the company has more figures for us to digest.

One of NVIDIA's biggest aims with its Blackwell GPU architecture is to reduce cost and energy requirements. NVIDIA states that the Blackwell platform can simulate weather patterns at 200x lower cost and with 300x less energy, while digital twin simulations encompassing the entire planet can be run at 65x lower cost and with 58x less energy.

NVIDIA also sheds light on the double-precision (FP64) floating-point capabilities of its Blackwell GPUs, which are rated at roughly 30% more TFLOPs than Hopper. A single Hopper H100 GPU offers around 34 TFLOPs of FP64 compute, while a single Blackwell B100 GPU offers around 45 TFLOPs. Blackwell mostly comes in the GB200 Superchip, which pairs two GPUs with a Grace CPU, for around 90 TFLOPs of FP64 compute. On a per-chip basis, however, Blackwell still trails AMD's Instinct MI300X and MI300A accelerators, which offer 81.7 and 61.3 TFLOPs of FP64 compute, respectively.
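To make the FP64 comparison concrete, the short sketch below simply re-does the arithmetic on the figures quoted above. It assumes those per-chip numbers as given in this article (not official spec-sheet values) and ignores any FP64 contribution from the Grace CPU. Note that 45 / 34 works out to about 32%, which NVIDIA's "30% more" headline rounds down.

```python
# FP64 figures as quoted in this article (TFLOPs); assumed, not official spec-sheet values.
fp64_tflops = {
    "H100": 34.0,
    "B100": 45.0,
    "MI300X": 81.7,
    "MI300A": 61.3,
}

# Per-GPU uplift of Blackwell over Hopper.
uplift = fp64_tflops["B100"] / fp64_tflops["H100"] - 1
print(f"Blackwell vs Hopper: +{uplift:.0%}")

# GB200 pairs two Blackwell GPUs with one Grace CPU; the CPU's FP64 is ignored here.
gb200_tflops = 2 * fp64_tflops["B100"]
print(f"GB200 (2 GPUs): {gb200_tflops:.0f} TFLOPs")

# Per-chip gap between AMD's Instinct parts and a single Blackwell GPU.
for chip in ("MI300X", "MI300A"):
    ratio = fp64_tflops[chip] / fp64_tflops["B100"]
    print(f"{chip} vs one Blackwell GPU: {ratio:.2f}x")
```

Running it shows why the per-chip and per-Superchip views tell different stories: one Blackwell GPU lands well below an MI300X, while a two-GPU GB200 module edges past it.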

While NVIDIA's Blackwell GPUs fall behind in traditional dense floating-point performance, that alone shouldn't undermine their compute capabilities. The company first shows off simulation performance: the Cadence SpectreX simulator runs 13x faster on Blackwell GB200, and Computational Fluid Dynamics (CFD) sees 22x gains, versus ASICs and traditional CPUs. The chip is also several times faster than A100 and Grace Hopper (GH200) systems.

NVIDIA then quickly shifts gears to AI performance, where its Blackwell GB200 GPU platform once again reigns supreme with a 30x gain over H100 on a 1.8-trillion-parameter GPT model. The GB200 NVL72 platform enables up to 30x higher throughput while achieving 25x higher energy efficiency and 25x lower TCO (Total Cost of Ownership). Even pitting the GB200 NVL72 system against 72 x86 CPUs yields an 18x gain for Blackwell, along with a 3.27x gain over the GH200 NVL72 system, in a database join query.

With all the talk surrounding Blackwell, one might expect Hopper to be forgotten, but that isn't the case at all. NVIDIA's Grace Hopper GH200 Superchip platform remains the undisputed king of the AI segment for now and currently powers nine supercomputers around the world with a combined 200 exaflops of AI performance, or 200 quintillion calculations per second.
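The "200 exaflops = 200 quintillion calculations per second" equivalence is just a unit conversion, since the exa- prefix means 10^18, which is one quintillion on the short scale used in English. A minimal sketch of that arithmetic, using only the 200-exaflop figure quoted above:

```python
# The exa- prefix is 10**18, which is one quintillion on the short scale.
EXA = 10**18

combined_exaflops = 200  # combined AI compute quoted for the nine GH200-based systems
ops_per_second = combined_exaflops * EXA

print(f"{ops_per_second:.1e} operations per second")
print(ops_per_second // 10**18, "quintillion operations per second")
```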

New Grace Hopper-based supercomputers coming online include EXA1-HE, in France, from CEA and Eviden; Helios at Academic Computer Centre Cyfronet, in Poland, from Hewlett Packard Enterprise (HPE); Alps at the Swiss National Supercomputing Centre, from HPE; JUPITER at the Jülich Supercomputing Centre, in Germany; DeltaAI at the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign; and Miyabi at Japan’s Joint Center for Advanced High Performance Computing — established between the Center for Computational Sciences at the University of Tsukuba and the Information Technology Center at the University of Tokyo.

NVIDIA

NVIDIA's GPUs are the product of choice right now for the growing AI demand, and there appears to be no slowdown in sight. Analysts expect NVIDIA to remain the dominant force throughout 2024, and once Blackwell becomes available to customers, we can expect it to usher in record levels of performance in the AI segment and record revenue for NVIDIA. But NVIDIA isn't stopping any time soon: the company is anticipated to start production of its next-gen Rubin R100 GPUs as early as late 2025, and the initial details sound impressive.