NVIDIA Says It Spends 20% of Its Time on Hardware & 80% on Software As First DGX H100 Systems Roll Out

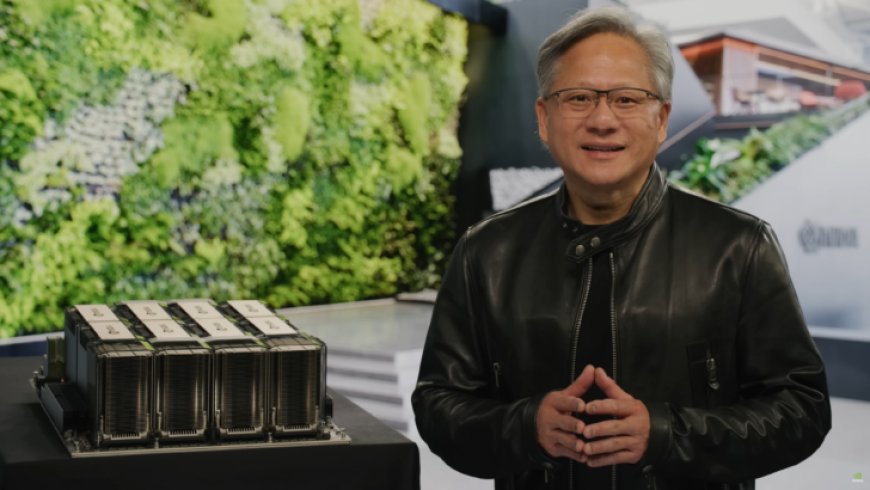

Today, NVIDIA announced that its first DGX H100 systems are shipping to customers all over the globe to advance AI workloads.

Announced back at GTC 2022, the NVIDIA Hopper H100 was billed as the world's first and fastest 4nm data center chip, delivering up to 4000 TFLOPs of AI compute power. Featuring a brand-new GPU architecture with 80 billion transistors, blazing-fast HBM3 memory, and NVLink capabilities, the chip targets workloads such as Artificial Intelligence (AI), Machine Learning (ML), Deep Neural Networks (DNN), and a range of HPC-focused compute tasks.

Now, the first DGX H100 Hopper systems are shipping to customers from Tokyo to Stockholm, says NVIDIA. In its press release, the company states that the Hopper H100 GPUs were born to run the generative AI that has taken the world by storm with tools such as ChatGPT (GPT-4). CEO Jensen Huang has already called ChatGPT one of the greatest things ever done for computing and described it as the "iPhone moment" of AI. The company has seen major demand for its AI GPUs, sending its stock soaring.

NVIDIA is leading the charge in supplying some of the world's top data center clients responsible for running these generative tools. Some of these companies are listed below:

Boston Dynamics AI Institute (The AI Institute), a research organization which traces its roots to Boston Dynamics, the well-known pioneer in robotics, will use a DGX H100 to pursue that vision. Researchers imagine dexterous mobile robots helping people in factories, warehouses, disaster sites, and eventually homes.

Scissero has offices in London and New York, and employs a GPT-powered chatbot to make legal processes more efficient. Its Scissero GPT can draft legal documents, generate reports and conduct legal research.

In Germany, DeepL will use several DGX H100 systems to expand services like translation between dozens of languages it provides for customers, including Nikkei, Japan’s largest publishing company. DeepL recently released an AI writing assistant called DeepL Write.

In Tokyo, DGX H100s will run simulations and AI to speed the drug discovery process as part of the Tokyo-1 supercomputer. Xeureka — a startup launched in November 2021 by Mitsui & Co. Ltd., one of Japan’s largest conglomerates — will manage the system.

via NVIDIA

But that's not all. NVIDIA has slowly been transitioning from a hardware company into a software company and provider. Building hardware such as GPUs and HPC systems is one thing; getting the most out of it is an even more crucial task. For this purpose, NVIDIA maintains a vast software stack designed to squeeze every bit of performance out of its GPUs. Software suites such as NVIDIA Base Command and NVIDIA AI Enterprise, along with the entire CUDA framework, are just some of the building blocks of the NVIDIA software stack available to its customers and partners.

"At NVIDIA, we spend 20% of our time on the hardware and 80% of our time on the software.

We are continually working on the algorithms, to understand what our hardware can and cannot do. AI is a full-stack problem."

- Manuvir Das, Head of Enterprise Computing

— Simon Erickson (@7Innovator) May 1, 2023

NVIDIA's software stack has evolved to such an extent over the years that Manuvir Das, NVIDIA's Head of Enterprise Computing, said during the Future Compute event that the company now spends 20% of its time on hardware and 80% on software. He added that NVIDIA is continually working on its algorithms to understand what its hardware can and cannot do.

The GPU giant and chipmaker is also leveraging its own AI prowess to develop its next-gen GPUs. With the help of machine learning, NVIDIA says it can design chips "better than humans," and its cuLitho software library is claimed to speed up computational lithography, a key step in chip manufacturing, by up to 40x compared with traditional methods. NVIDIA is working with ASML and TSMC to help drive adoption of this technology.

In recent benchmarks published by MLPerf, NVIDIA's GPUs, including the Hopper H100, showed drastic performance improvements in AI inference and deep learning workloads. Once again, these gains were achieved largely thanks to continued updates and innovation across NVIDIA's ever-growing software stack.
