NVIDIA Next-Gen B100 “Blackwell” AI GPUs Enter Supply Chain Certification Stage With Foxconn & Wistron In Race

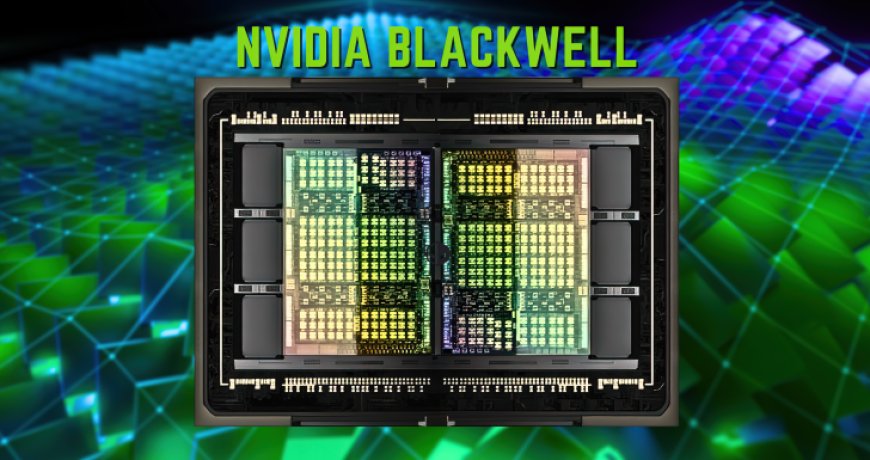

NVIDIA's Blackwell B100 GPUs have reportedly entered the supply chain certification stage, another step in the development of the next-gen AI powerhouse.

Chinese and Taiwanese outlets UDN and CTEE report that NVIDIA has now entered the supply chain certification stage for its upcoming Blackwell B100 AI GPUs. The GPU will succeed the Hopper H100 chip and is expected to deliver a major leap in compute performance and AI capabilities.

To ensure swift manufacturing and supply at launch, NVIDIA is now selecting its supply chain partners. Reports suggest that Wistron has been selected as the primary partner to supply substrates for the production of the Blackwell GPUs. Both Wistron and Foxconn were in the race to secure these orders; however, concerns over yields and other factors reportedly led to Wistron winning all of the early-stage orders.

NVIDIA's GB200 (B100) AI server is scheduled to launch in 2024, and the supply chain has entered the certification stage. There are rumors in the market that Hon Hai (Foxconn) originally planned to win the B100 substrate order, but its certification has recently been "blocked". Wistron maintains its original order share.

It is rumored that NVIDIA originally planned to include Hon Hai as the second AI-GPU server substrate supplier for the next-generation B100 series. However, due to yield rate and other considerations, Wistron will still obtain 100% of the order share, and Wistron has also reportedly taken the opportunity to win the early-stage orders for AI-GPU modules.

via CTEE

UDN also mentions that NVIDIA will be shifting its H100 and B100 GPU orders in North America to Foxconn. These orders are specifically for the H100 and B100 modules, so while the substrates are provided by Wistron, Foxconn is likely to be the end supplier offering the fully packaged AI modules.

All orders for NVIDIA's highest-end H100 AI server modules in North America were handed over to Hon Hai, and they will be produced in factories in Mexico, the United States, and Hsinchu, Taiwan. The NVIDIA B100 module order that the industry is most concerned about will also be contracted to Hon Hai in the future.

via UDN

As per the latest reports, NVIDIA has moved up the launch of its Blackwell B100 AI GPUs, which are now said to launch in Q2 2024. The GPUs are said to utilize SK hynix's HBM3e memory, which is claimed to offer the highest bandwidth and transfer speeds in the industry. The new GPUs are also expected to help NVIDIA retain its dominance of the AI segment, where it reportedly already holds a 90% market share. Next year is going to be a heated battle, with other chipmakers such as AMD, Intel, and Tenstorrent also picking up the pace in the AI segment.

News Source: Dan Nystedt