NVIDIA Blackwell Is Up & Running In Data Centers: NVLINK Upgraded To 1.4 TB/s, More GPU Details, First-Ever FP4 GenAI Image

NVIDIA Blackwell Is Up & Running In Data Centers: NVLINK Upgraded To 1.4 TB/s, More GPU Details, First-Ever FP4 GenAI Image

NVIDIA slams down Blackwell delay rumors as it moves towards sharing more info on the data center Goliath, now operational at data centers.

With Hot Chips commencing next week, NVIDIA is giving us a heads-up on what to expect during the various sessions that they have planned during the event.

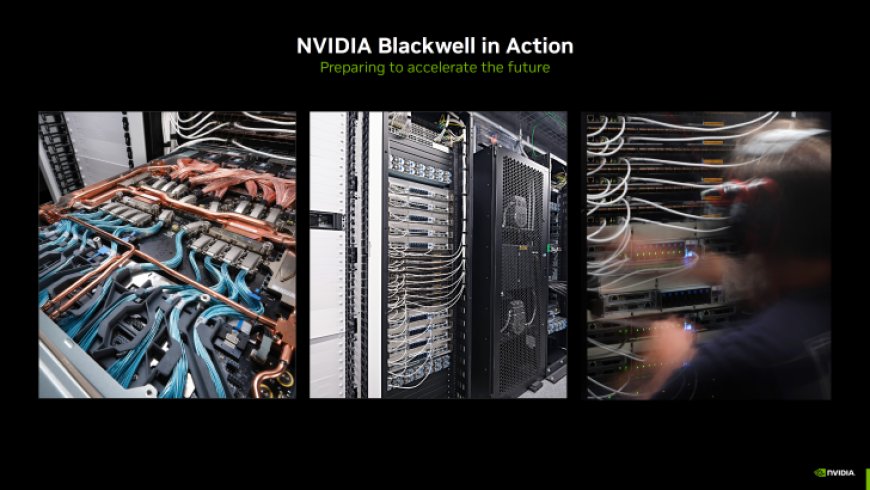

Given that there has been a recent surge in rumors regarding a delay in Blackwell's roll-out, the company kicked off a press session by showing Blackwell up and running in one of its data centers and as the company has already stated previously, Blackwell is on track for ramp and will be shipping to customers later this year. So there is not a whole lot of weight to anyone saying that Blackwell has some sort of defect or issue & that it won't make it to markets this year.

But Blackwell isn't just one chip, it's a platform. Just like Hopper, Blackwell encompasses a vast array of designs for data center, cloud, and AI customers, & each Blackwell product is comprised of various chips. These include:

NVIDIA is also sharing brand new pictures of various trays featured in the Blackwell lineup. These are the first pictures of Blackwell trays being shared and show the amount of engineering expertise that goes into designing the next-generation Data Center platforms.

The Blackwell generation is designed to tackle modern AI needs and to offer great performance in large language models such as the 405B Llama-3.1 from Meta. As LLMs grow in size with larger parameter sizes, data centers will require more compute and lower latency. Now you can make a large GPU with loads of memory and put the entire model on that chip but multiple GPUs are the requirement for achieving lower latency in token generation.

The Multi-GPU inference approach splits the calculations across multiple GPUs for low latency and high throughput but going the multi-GPU route has its complications. Each GPU in a multi-GPU environment will have to send results of calculations to every other GPU at each layer and this brings the need for high-bandwidth GPU-to-GPU communication.

NVIDIA's solution is already available for multi-GPU instances in the form of the NVSwitch. Hopper NVLINK switches offer up to 1.5x higher inference throughput compared to a traditional GPU-to-GPU approach thanks to its 900 GB/s interconnect (fabric) bandwidth. Instead of having to make several HOPS going from one GPU to the other, the NVLINK Switch makes it so that the GPU only needs to make 1 HOP to the NVSwitch and the other HOP directly to the secondary GPU.

Talking about the GPU itself, NVIDIA shared a few speeds and feeds on the Blackwell GPU itself which are as follows:

And some of the advantages of building a reticle limit chip include:

With Blackwell, NVIDIA is introducing an even faster NVLINK Switch which doubles the fabric bandwidth to 1.8 TB/s. The NVLINK Switch itself is an 800mm2 die based on TSMC's 4NP node & extends NVLINK to 72 GPUs in the GB200 NVL72 racks. The chip provides 7.2 TB/s of full all-to-all bidirectional bandwidth over 72 ports and has an in-network compute capability of 3.6 TFLOPs. The NVLINK Switch Tray comes with two of these switches, offering up to 14.4 TB/s of total bandwidth.

One of the tutorials planned by NVIDIA for Hot Chips is titled "Liquid Cooling Boosts Performance and Efficiency". These new liquid-cooling solutions will be adopted by GB200, Grace Blackwell GB200, and B200 systems.

One of the liquid-cooling approaches to be discussed is the use of warm water direct-to-chip which offers improved cooling efficiency, lower operation cost, extended IT server life, and heat reuse possibility. Since these are not traditional chillers that require power to cool down the liquid, the war water approach can deliver up to a 28% reduction in data center facility power costs.

NVIDIA is also sharing the world's first Generative AI image made using FP4 compute. The FP4-quantized model is shown to produce a 4-bit bunny image that is very similar to FP16 models at much faster speeds. This image was produced by MLPerf using Blackwell in Stable Diffusion. Now the challenge with reducing precision (going from FP16 to FP4) is that some accuracy is lost.

There are some variances in the orientation of the bunny but mostly, the accuracy is preserved and the image is still great in terms of quality. This utilization of FP4 precision is part of NVIDIA's Quasar Quantization system and research which is pushing reduced-precision AI compute to the next level.

NVIDIA, as mentioned previously, is leveraging AI to build chips for AI. The generative AI prowess is used to generate optimized Verilog code which is a hardware description language that describes circuits in the form of code and is used for design and verification of processors such as Blackwell. The language is also helping the speedup of next-gen chip architectures, pushing NVIDIA to deliver on its yearly cadence.

NVIDIA is expected to follow up with Blackwell Ultra GPU next year which features 288 GB of HBM3e memory, increased compute density, and more AI flops and that would be followed by Rubin / Rubin Ultra GPUs in 2026 and 2027, respectively.

What's Your Reaction?