Intel has announced validation & optimization for its Xeon, Core Ultra, Arc & Gaudi product lineups for Meta's latest Llama 3 GenAI workloads.

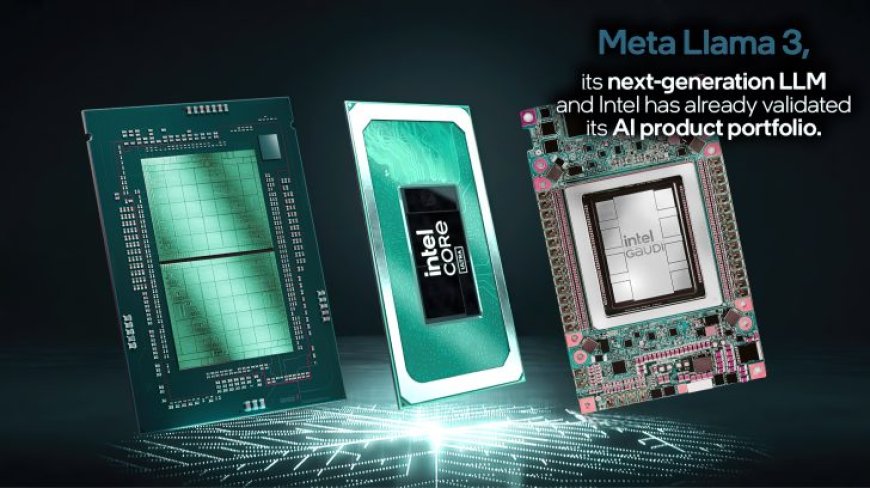

Press Release: Today, Meta launched Meta Llama 3, its next-generation large language model (LLM). Effective on launch day, Intel has validated its AI product portfolio for the first Llama 3 8B and 70B models across Gaudi accelerators, Xeon processors, Core Ultra processors, & Arc GPUs.

Why It Matters: As part of its mission to bring AI everywhere, Intel invests in the software and AI ecosystem to ensure that its products are ready for the latest innovations in the dynamic AI space. In the data center, Gaudi and Xeon processors with Advanced Matrix Extension (AMX) acceleration give customers options to meet dynamic and wide-ranging requirements.

Intel Core Ultra processors and Arc graphics products provide both a local development vehicle and deployment across millions of devices, with support for comprehensive software frameworks and tools, including PyTorch and Intel Extension for PyTorch for local research and development, and the OpenVINO toolkit for model development and inference.

About Llama 3 Running on Intel: Intel’s initial testing and performance results for the Llama 3 8B and 70B models use open source software, including PyTorch, DeepSpeed, the Optimum Habana library, and Intel Extension for PyTorch, to provide the latest software optimizations.

Intel Gaudi 2 accelerators have optimized performance on Llama 2 models – 7B, 13B, and 70B parameters – and now have initial performance measurements for the new Llama 3 model. With the maturity of the Gaudi software, Intel easily ran the new Llama 3 model and generated results for inference and fine-tuning. Llama 3 is also supported by the recently announced Gaudi 3 accelerator.

Intel Xeon processors address demanding end-to-end AI workloads, and Intel invests in optimizing LLM results to reduce latency. Xeon 6 processors with Performance-cores (code-named Granite Rapids) show a 2x improvement on Llama 3 8B inference latency compared with 4th Gen Xeon processors and the ability to run larger language models, like Llama 3 70B, under 100ms per generated token.

Intel Core Ultra and Arc Graphics deliver impressive performance for Llama 3. In an initial round of testing, Core Ultra processors already generate text faster than typical human reading speeds. Further, the Arc A770 GPU has Xe Matrix eXtensions (XMX) AI acceleration and 16GB of dedicated memory to provide exceptional performance for LLM workloads.

Xeon Scalable Processors

Intel has been continuously optimizing LLM inference for Xeon platforms. As an example, compared to the Llama 2 launch, software improvements in PyTorch and Intel Extension for PyTorch have delivered a 5x latency reduction. The optimization uses paged attention and tensor parallelism to maximize the available compute utilization and memory bandwidth. Figure 1 shows the performance of Meta Llama 3 8B inference on an AWS m7i.metal-48x instance, which is based on the 4th Gen Xeon Scalable processor.
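To make the paged attention idea concrete, here is a minimal sketch of the bookkeeping it relies on: the key/value cache is split into fixed-size blocks, and each sequence keeps a table mapping its logical token positions to physical blocks. This is only an illustration of the general technique, not the implementation in PyTorch or Intel Extension for PyTorch; the class name `PagedKVCache`, its fields, and the block sizes are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PagedKVCache:
    """Toy block allocator illustrating the bookkeeping behind paged attention."""

    num_blocks: int        # physical blocks in the pool (hypothetical size)
    block_size: int = 16   # tokens stored per block (hypothetical value)
    free_blocks: list = field(default_factory=list)
    block_tables: dict = field(default_factory=dict)  # seq_id -> physical block ids
    seq_lens: dict = field(default_factory=dict)      # seq_id -> tokens stored so far

    def __post_init__(self):
        # Initially every physical block is free.
        self.free_blocks = list(range(self.num_blocks))

    def append_token(self, seq_id):
        """Reserve a slot for one new token; return (physical_block, offset)."""
        length = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:
            # The current block is full (or the sequence is new): take one from the pool.
            if not self.free_blocks:
                raise MemoryError("KV cache pool exhausted")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1
        return table[length // self.block_size], length % self.block_size

    def release(self, seq_id):
        """Return all blocks of a finished sequence to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=4, block_size=2)
    for _ in range(3):
        print(cache.append_token("request-a"))
    cache.release("request-a")
```

Because blocks are allocated only as a sequence grows and are returned to the pool when it finishes, memory is not reserved up front for the maximum sequence length, which is what lets more requests share the same cache.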

We benchmarked Meta Llama 3 on a Xeon 6 processor with Performance-cores (formerly code-named Granite Rapids) to share a preview of the performance. These preview numbers demonstrate that Xeon 6 offers a 2x improvement on Llama 3 8B inference latency compared to widely available 4th Gen Xeon processors, and the ability to run larger language models, like Llama 3 70B, in under 100ms per generated token on a single two-socket server.
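As a rough aid for reading these latency claims, the sketch below converts a per-token latency into a generation rate and applies a speedup factor to a baseline latency. It assumes that a "2x improvement" means the per-token latency is halved; the function names and the 50 ms baseline are hypothetical and are not figures from the press release.

```python
def tokens_per_second(latency_ms_per_token: float) -> float:
    """Generation rate of one sequence, given its per-token latency in milliseconds."""
    return 1000.0 / latency_ms_per_token


def latency_after_speedup(baseline_ms: float, speedup: float) -> float:
    """Per-token latency after a speedup, assuming speedup divides latency."""
    return baseline_ms / speedup


if __name__ == "__main__":
    # The 100 ms per-token bound quoted for Llama 3 70B corresponds to at least this rate.
    print(tokens_per_second(100.0))
    # Hypothetical 50 ms baseline with a 2x improvement.
    print(latency_after_speedup(50.0, 2.0))
```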

| Model | TP | Precision | Input length | Output length | Throughput (tokens/sec) | Latency* (ms) | Batch |
|---|---|---|---|---|---|---|---|
| Meta-Llama-3-8B-Instruct | 1 | fp8 | 2k | 4k | 1549.27 | 7.747 | 12 |
| Meta-Llama-3-8B-Instruct | 1 | bf16 | 1k | 3k | 469.11 | 8.527 | 4 |
| Meta-Llama-3-70B-Instruct | 8 | fp8 | 2k | 4k | 4927.31 | 56.23 | 277 |
| Meta-Llama-3-70B-Instruct | 8 | bf16 | 2k | 2k | 3574.81 | 60.425 | 216 |
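The source does not define the Latency* column, but the reported figures are consistent with latency being the time per generated token at the listed batch size. The sketch below checks that reading by comparing each reported throughput with batch × 1000 / latency. The relationship is an observation from the numbers, not something the press release states, and the names `ROWS` and `implied_throughput` are hypothetical.

```python
# (model, precision, batch, latency_ms, reported_throughput_tok_s), copied from the table.
ROWS = [
    ("Meta-Llama-3-8B-Instruct", "fp8", 12, 7.747, 1549.27),
    ("Meta-Llama-3-8B-Instruct", "bf16", 4, 8.527, 469.11),
    ("Meta-Llama-3-70B-Instruct", "fp8", 277, 56.23, 4927.31),
    ("Meta-Llama-3-70B-Instruct", "bf16", 216, 60.425, 3574.81),
]


def implied_throughput(batch: int, latency_ms: float) -> float:
    """Tokens per second if every sequence in the batch emits one token per latency interval."""
    return batch * 1000.0 / latency_ms


def relative_errors(rows):
    """Relative gap between reported and implied throughput for each row."""
    return [
        abs(reported - implied_throughput(batch, latency_ms)) / reported
        for _, _, batch, latency_ms, reported in rows
    ]


if __name__ == "__main__":
    for row, err in zip(ROWS, relative_errors(ROWS)):
        print(f"{row[0]} {row[1]}: relative gap {err:.5f}")
```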

Client Platforms

In an initial round of evaluation, the Intel Core Ultra processor already generates text faster than typical human reading speeds. These results are driven by the built-in Arc GPU with 8 Xe-cores, including DP4a AI acceleration, and up to 120 GB/s of system memory bandwidth. We are excited to invest in continued performance and power efficiency optimizations on Llama 3, especially as we move to our next-generation processors.
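One way to see why memory bandwidth matters here is a back-of-the-envelope bound: when generating one token at a time, the model weights must be read from memory roughly once per token, so bandwidth divided by weight size gives an approximate ceiling on tokens per second. The sketch below applies that common approximation. The weight precision (4 bits per parameter), the reading speed, and the tokens-per-word ratio are all illustrative assumptions, not figures from Intel, and the function names are hypothetical.

```python
def decode_upper_bound_tok_s(bandwidth_gb_s: float, params_billion: float,
                             bytes_per_param: float) -> float:
    """Approximate ceiling on single-stream tokens/sec if each token reads all weights once."""
    weight_gb = params_billion * bytes_per_param
    return bandwidth_gb_s / weight_gb


def reading_speed_tok_s(words_per_minute: float, tokens_per_word: float) -> float:
    """Convert a reading speed in words per minute into tokens per second."""
    return words_per_minute / 60.0 * tokens_per_word


if __name__ == "__main__":
    # 120 GB/s is from the text; 8B parameters at an ASSUMED 0.5 bytes each (4-bit weights).
    bound = decode_upper_bound_tok_s(120.0, 8.0, 0.5)
    # ASSUMED reader: 240 words per minute at 1.25 tokens per word.
    reader = reading_speed_tok_s(240.0, 1.25)
    print(f"bandwidth-bound ceiling: {bound:.1f} tokens/sec")
    print(f"assumed reading speed:   {reader:.1f} tokens/sec")
```

Under these assumptions the bandwidth ceiling sits well above the reading rate, which is compatible with the claim in the text, although real throughput also depends on compute, KV cache traffic, and software efficiency.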

With launch day support across Core Ultra processors and Arc graphics products, the collaboration between Intel and Meta provides both a local development vehicle and deployment across millions of devices. Intel client hardware is accelerated through comprehensive software frameworks and tools, including PyTorch and Intel Extension for PyTorch used for local research and development, and OpenVINO Toolkit for model deployment and inference.

What’s Next: In the coming months, Meta expects to introduce new capabilities, additional model sizes, and enhanced performance. Intel will continue to optimize the performance of its AI products to support this new LLM.
