Intel Announces Optimizations For Llama 3.1 To Boost Performance Across All Products: Gaudi, Xeon, Core & Arc Series

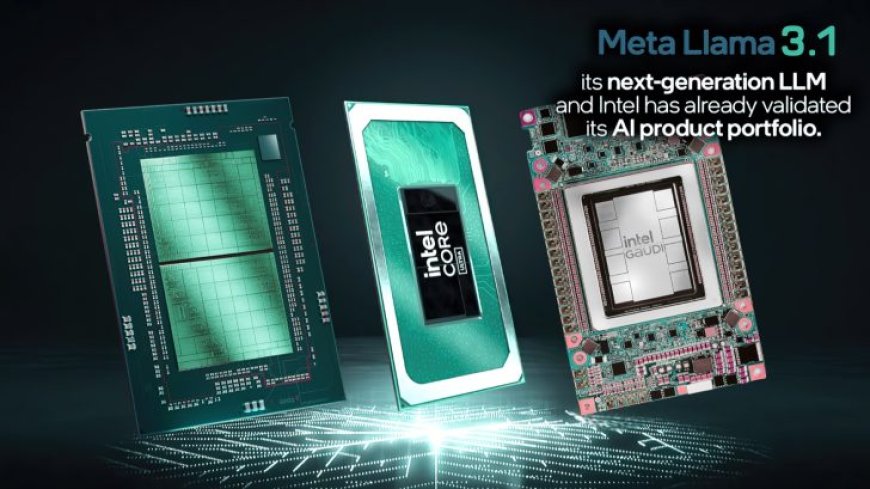

Meta's Llama 3.1 is now live, and Intel has announced full support for the Llama 3.1 AI models across its portfolio, including Gaudi, Xeon, Arc, and Core.

Meta launched its newest large language model, Llama 3.1, today, succeeding Llama 3, which was released in April. Alongside the launch, Intel published Llama 3.1 performance numbers for its latest products, including Intel Gaudi, Xeon, and AI PCs based on Core Ultra processors and Arc graphics. Intel continues to build out its AI software ecosystem, and the new Llama 3.1 models are enabled on its AI products through frameworks such as PyTorch and Intel Extension for PyTorch, DeepSpeed, Hugging Face Optimum libraries, and vLLM. The aim is enhanced performance for the latest Meta LLMs across Intel's data center, edge, and client AI products.

Llama 3.1 is a collection of multilingual LLMs, offering pre-trained and instruction-tuned generative models in several sizes. The largest foundation model in Llama 3.1 is the 405B, which offers state-of-the-art capabilities in general knowledge, steerability, math, tool use, and multilingual translation. The smaller models are the 70B, a highly performant yet cost-effective model, and the 8B, a lightweight model for ultra-fast response.

Intel tested Llama 3.1 405B on its Intel Gaudi accelerators, which are processors purpose-built for cost-effective, high-performance training and inference. The results show quick response and high throughput at different token lengths, demonstrating the capabilities of the Gaudi 2 accelerators and the Gaudi software. The Gaudi 2 accelerators are faster still on the 70B model with 32K and 128K token lengths.

Next on the test bench are Intel's 5th Gen Xeon Scalable processors, tested at various token lengths. With 1K, 2K, and 8K token inputs, the token latency stays within a narrow range (mostly under 40 ms and 30 ms) in both the BF16 and the weight-only quantization (WOQ) INT8 tests. This shows the quick response of Intel Xeon processors, which include Intel AMX (Advanced Matrix Extensions) to accelerate AI workloads. Even with 128K token inputs, the latency remains under 100 ms in both tests.
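For a rough sense of why BF16 and weight-only INT8 are tested side by side, the weight storage for each model size can be estimated with simple arithmetic. The sketch below is only a back-of-the-envelope illustration: the parameter counts are the nominal figures implied by the model names, the helper `weight_gb` is a hypothetical name, and the estimate ignores quantization scales, activations, and the KV cache.

```python
# Bytes needed to store a single weight in each format.
BYTES_PER_WEIGHT = {"bf16": 2.0, "int8": 1.0}

# Nominal parameter counts implied by the model names (an assumption;
# the exact counts of the released checkpoints differ slightly).
NOMINAL_PARAMS = {"8B": 8e9, "70B": 70e9, "405B": 405e9}


def weight_gb(num_params: float, fmt: str) -> float:
    """Approximate weight storage in GB, where 1 GB = 1e9 bytes."""
    return num_params * BYTES_PER_WEIGHT[fmt] / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        bf16 = weight_gb(params, "bf16")
        int8 = weight_gb(params, "int8")
        print(f"Llama 3.1 {name}: ~{bf16:.0f} GB in BF16, ~{int8:.0f} GB in INT8")
```

Under these assumptions, INT8 weights take half the storage of BF16 weights, which also halves the weight data read from memory for each generated token.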

Llama 3.1 8B inference is also quick on Intel Core Ultra processors, as tested with the 8B-Instruct model using 4-bit weights. On the Core Ultra 7 165H with built-in Arc graphics, the token latency remains between 50 ms and 60 ms with 32, 256, 512, and 1024 input tokens. On a discrete Arc GPU, the Arc A770 16GB Limited Edition, the latency is far lower, staying around 15 ms across all four input sizes.
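To put these latency figures in more familiar terms, per-token latency can be converted into an approximate generation rate. The sketch below assumes the reported numbers are steady per-token latencies for a single request, which the figures above do not spell out; `tokens_per_second` is a hypothetical helper name.

```python
def tokens_per_second(latency_ms: float) -> float:
    """Convert a per-token latency in milliseconds to tokens per second."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


if __name__ == "__main__":
    # Latency values quoted in the article (milliseconds per token).
    quoted = {
        "Core Ultra 7 165H, upper bound": 60.0,
        "Core Ultra 7 165H, lower bound": 50.0,
        "Arc A770 16GB": 15.0,
    }
    for label, latency in quoted.items():
        print(f"{label}: {latency:.0f} ms/token is about "
              f"{tokens_per_second(latency):.1f} tokens/s")
```

Under that assumption, 50 ms to 60 ms per token works out to roughly 17 to 20 tokens per second, and 15 ms per token to roughly 67 tokens per second.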
