Cerebras Intros 3rd Gen Wafer-Scale Chip For AI: 57x Larger Than Largest GPU, 900K Cores, 4 Trillion Transistors

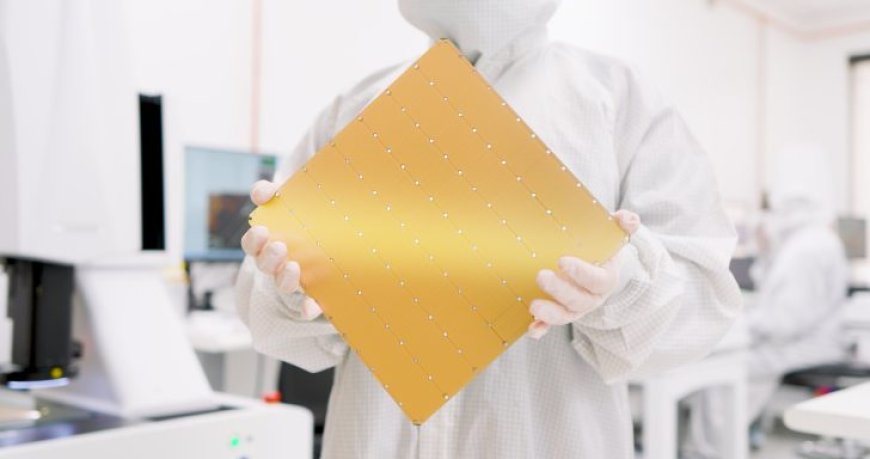

Cerebras Systems has unveiled its third-generation wafer-scale engine chip, the WSE-3, which offers 900,000 AI-optimized cores and is built to train models of up to 24 trillion parameters.

Cerebras hasn't looked back since the launch of its first Wafer Scale Engine (WSE) chip, and it has now unveiled its third-generation design. The specifications are enormous, as you would expect from a chip of this sheer size: as the name suggests, it is essentially an entire wafer's worth of silicon. This time, Cerebras is betting on the AI craze.

Talking about the chip itself, the Cerebras WSE-3 has a die size of 46,225mm², which is 57x larger than the NVIDIA H100 at 814mm². Both chips are built on TSMC 5nm-class process nodes (the H100 uses NVIDIA's custom 4N variant). The H100 is regarded as one of the best AI chips on the market with its 16,896 CUDA cores and 528 tensor cores, but it is dwarfed by the WSE-3, which offers 900,000 AI-optimized cores per chip, a 52x increase.
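The size and core-count ratios above can be checked with a few lines of arithmetic. This is only a sketch: it takes the H100 die area as 814mm² (NVIDIA's published figure, treated here as an assumption) and assumes the 52x core figure counts CUDA and tensor cores together, which the announcement does not spell out.

```python
# Figures quoted in the article.
WSE3_DIE_MM2 = 46_225
WSE3_CORES = 900_000
H100_CUDA_CORES = 16_896
H100_TENSOR_CORES = 528

# Assumption (not taken from the announcement): NVIDIA's published H100 die area.
H100_DIE_MM2 = 814


def ratio(a: float, b: float) -> float:
    """Return how many times larger a is than b."""
    return a / b


area_ratio = ratio(WSE3_DIE_MM2, H100_DIE_MM2)
# Assumption: the core comparison adds CUDA and tensor cores together.
core_ratio = ratio(WSE3_CORES, H100_CUDA_CORES + H100_TENSOR_CORES)

print(f"die area: {area_ratio:.1f}x")
print(f"cores:    {core_ratio:.1f}x")
```

Under those assumptions, both results round to the quoted 57x and 52x.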

The WSE-3 also has big performance numbers to back it up, with 21 Petabytes per second of memory bandwidth (7000x more than the H100) and 214 Petabits per second of fabric bandwidth (3715x more than the H100). The chip incorporates 44 GB of on-chip memory, which is 880x more than the H100 offers.
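Dividing each WSE-3 figure by its quoted multiple shows what H100 baseline each comparison implies. The sketch below uses only the numbers in the paragraph above; the dictionary keys are illustrative names, and decimal prefixes are assumed.

```python
# Decimal unit prefixes (an assumption; the article does not say decimal vs binary).
PETA, TERA, GIGA, MEGA = 1e15, 1e12, 1e9, 1e6

# (WSE-3 value, quoted multiple over the H100), as stated in the article.
claims = {
    "memory_bandwidth_bytes_per_s": (21 * PETA, 7000),
    "fabric_bandwidth_bits_per_s": (214 * PETA, 3715),
    "on_chip_memory_bytes": (44 * GIGA, 880),
}

# Implied H100 baseline = WSE-3 value / quoted multiple.
implied = {name: value / multiple for name, (value, multiple) in claims.items()}

print(f"memory bandwidth: {implied['memory_bandwidth_bytes_per_s'] / TERA:.1f} TB/s")
print(f"fabric bandwidth: {implied['fabric_bandwidth_bits_per_s'] / TERA:.1f} Tb/s")
print(f"on-chip memory:   {implied['on_chip_memory_bytes'] / MEGA:.0f} MB")
```

The implied baselines come out to roughly 3 TB/s of memory bandwidth, about 57.6 Tb/s of fabric bandwidth, and 50 MB of on-chip memory.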

Compared to the first-generation WSE, the WSE-3 offers 2.25x more cores (900K vs 400K) and 2.4x more SRAM (44 GB vs 18 GB), along with much higher interconnect speeds, all within the same package size. Against the WSE-2, which already had 850K cores and 40 GB of SRAM, the gains are smaller, but the WSE-3 still packs 54% more transistors (4 trillion vs 2.6 trillion).

So what's the benefit of all of this hardware? Well, the chip is designed for AI first and offers 125 PetaFlops of peak AI performance. The NVIDIA H100 offers around 3958 TeraFlops, or around 4.0 PetaFlops, of peak AI performance, so we are talking about a 31.25x increase here. The chip can also be paired with external memory configurations of 1.5 TB, 12 TB, or up to 1.2 PB. With so much power in a single die, the chip can train AI models of up to 24 trillion parameters.
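The 31.25x figure depends on rounding the H100's peak to 4.0 PetaFlops. A short sketch using only the two peak numbers quoted above shows both the rounded and unrounded ratios:

```python
# Peak AI performance figures quoted in the article.
WSE3_PFLOPS = 125.0
H100_TFLOPS = 3958.0

h100_pflops = H100_TFLOPS / 1000  # 3.958 PetaFlops

# Ratio using the unrounded H100 figure.
exact_ratio = WSE3_PFLOPS / h100_pflops

# Ratio using the H100 figure rounded to one decimal place (4.0 PetaFlops).
rounded_ratio = WSE3_PFLOPS / round(h100_pflops, 1)

print(f"unrounded: {exact_ratio:.2f}x")
print(f"rounded:   {rounded_ratio:.2f}x")
```

With the unrounded H100 number the ratio is closer to 31.6x, so the two figures differ only by rounding.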

In addition to the WSE-3 wafer scale engine chip, Cerebras Systems is also announcing its CS-3 AI supercomputer which can train models that are 10x larger than GPT-4 and Gemini thanks to its huge memory pool. The CS-3 AI solution is designed for both enterprise and hyperscale users and delivers much higher performance efficiency compared to modern-day GPUs.

The Condor Galaxy 3 supercomputer will be powered by 64 of these CS-3 AI systems and will offer 8 ExaFlops of AI compute performance, doubling the performance of the previous-generation system at the same power and cost. The company hasn't shared pricing or availability for the WSE-3 chips, but they're expected to cost a lot, and by a lot, I mean a lot more than the $25-$30K asking price for NVIDIA's H100 GPUs.
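The 8 ExaFlops total follows directly from the per-chip peak. The sketch below assumes each CS-3 system contains a single WSE-3 running at its 125 PetaFlops peak, and that the cluster figure is a simple sum with no scaling losses:

```python
CS3_SYSTEMS = 64
# Assumption: one WSE-3 per CS-3, each at the quoted 125 PetaFlops peak.
PFLOPS_PER_CS3 = 125

total_pflops = CS3_SYSTEMS * PFLOPS_PER_CS3
total_exaflops = total_pflops / 1000  # 1 ExaFlop = 1000 PetaFlops

print(f"{total_pflops} PetaFlops = {total_exaflops:g} ExaFlops")
```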
