Celestial AI Combines HBM & DDR5 Memory To Lower Power Consumption By 90%, Could Be Used By AMD In Next-Gen Chiplets

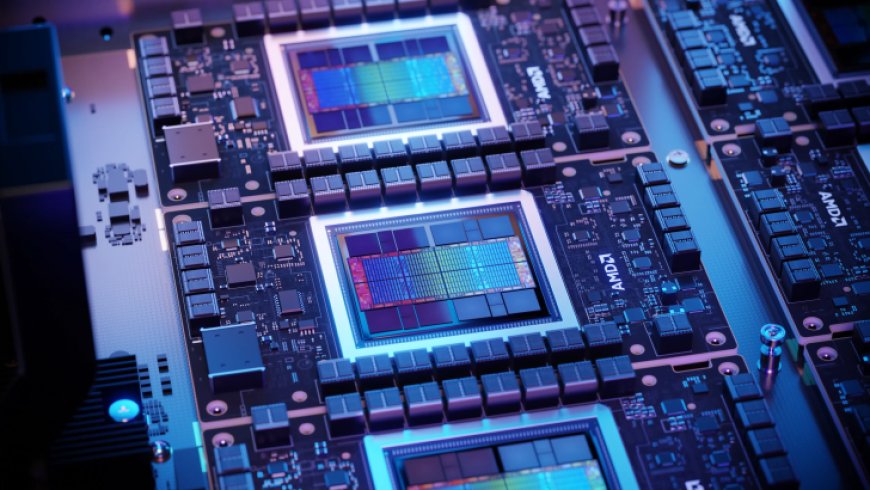

Startup Celestial AI has developed a new interconnect solution that pairs DDR5 and HBM memory to improve chiplet efficiency, and AMD could be among the first to adopt such a design.

As with semiconductors, generational progress has become essential for the AI industry, whether it comes from advances in hardware or in interconnect methods.

Conventional ways of linking thousands of accelerators include NVIDIA's NVLink, traditional Ethernet, and AMD's own Infinity Fabric. However, these approaches are limited in several ways, both in the interconnect efficiency they deliver and in their room for expansion. This has pushed the industry to look for alternatives, one of which is Celestial AI's Photonic Fabric.

In an earlier post, we discussed the importance of silicon photonics and how the technology, which combines lasers with silicon, has become the next big thing in the world of interconnects. Celestial AI has built its Photonic Fabric solution on this technology.

According to co-founder Dave Lazovsky, Photonic Fabric has drawn strong interest from potential clients. The company not only raised $175 million in its first round of funding but also secured backing from the likes of AMD, which shows how big the interconnect could turn out to be.

The surge in demand for our Photonic Fabric is the product of having the right technology, the right team and the right customer engagement model.

- Celestial AI co-founder Dave Lazovsky

As for Photonic Fabric's capabilities, the firm has disclosed that the first generation of the technology can potentially provide 1.8 Tb/s per square millimeter, and the second generation is expected to deliver four times that figure. However, the memory capacity limits that come with stacking multiple HBM stacks constrain the interconnect to an extent, and Celestial AI has proposed an attractive solution for that as well.
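To put the bandwidth-density figures above into more familiar units, here is a minimal back-of-the-envelope sketch. Only the 1.8 Tb/s per mm² figure and the 4x multiplier come from the article; the function names are hypothetical, and the conversion assumes decimal units with 8 bits per byte.

```python
# Back-of-the-envelope check of the quoted Photonic Fabric bandwidth density.
# From the article: 1.8 Tb/s per mm^2 (gen 1) and roughly 4x that for gen 2.
# Helper names are hypothetical; decimal units and 8 bits per byte are assumed.

GEN1_TBPS_PER_MM2 = 1.8  # first-generation figure, terabits per second per mm^2
GEN2_MULTIPLIER = 4      # second generation quoted as four times the first


def gen2_density(gen1: float = GEN1_TBPS_PER_MM2, factor: int = GEN2_MULTIPLIER) -> float:
    """Return the implied second-generation density in Tb/s per mm^2."""
    return gen1 * factor


def tbps_to_gbytes_per_s(tbps: float) -> float:
    """Convert terabits per second to gigabytes per second (decimal units)."""
    return tbps * 1000 / 8


if __name__ == "__main__":
    g2 = gen2_density()
    print(f"Gen 1: {GEN1_TBPS_PER_MM2} Tb/s/mm^2 = {tbps_to_gbytes_per_s(GEN1_TBPS_PER_MM2):.0f} GB/s/mm^2")
    print(f"Gen 2: {g2:.1f} Tb/s/mm^2 = {tbps_to_gbytes_per_s(g2):.0f} GB/s/mm^2")
```

Under these assumptions, the first generation works out to about 225 GB/s per mm² and the second to about 7.2 Tb/s, or 900 GB/s, per mm².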

The firm intends to combine DDR5 memory with HBM stacks on a memory expansion module to reach a much larger capacity. The module pairs two HBM stacks with a set of four DDR5 DIMMs, providing 72 GB of HBM and up to 2 TB of DDR5. This is an interesting approach, since DDR5 offers far more capacity per dollar than HBM, ultimately resulting in a more cost-efficient design. Celestial AI plans to use Photonic Fabric as the interface that links everything together, and the firm describes this approach as a "supercharged Grace-Hopper without all the cost overhead."

However, Celestial AI does not expect its interconnect solution to reach the market until 2027 at the earliest. By then, many competitors will have emerged in the silicon photonics segment, so Celestial AI is unlikely to have an easy time entering the market, especially once mainstream solutions from TSMC and Intel arrive.

News Sources: TechRadar, The Next Platform
