AMD Talks “1.2 Million GPU” AI Supercomputer, Claims “Sober People” Are Ready To Spend Billions In The AI Race

AMD has disclosed that it received an inquiry to build a massive supercomputer based on 1.2 million data center GPUs, a staggering number given current market dynamics.

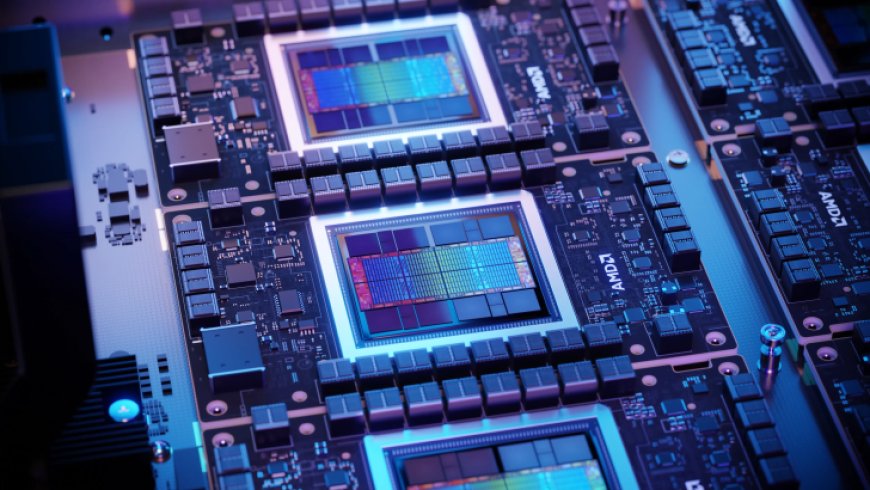

Team Red might have found its next "behemoth" of a client, as the firm says it might be involved in building an AI cluster that houses a whopping 1.2 million GPUs. Speaking with The Next Platform, AMD's EVP and GM of the Datacenter Solutions Group, Forrest Norrod, said that AMD has seen inquiries from unnamed clients that would require a massive supply of AI accelerators. He confirmed this when asked whether anyone is seriously considering such a venture.

TPM: What’s the biggest AI training cluster that somebody is serious about – you don’t have to name names. Has somebody come to you and said with MI500, I need 1.2 million GPUs or whatever.

Forrest Norrod: It’s in that range? Yes.

TPM: You can’t just say “it’s in that range.” What’s the biggest actual number?

Forrest Norrod: I am dead serious, it is in that range.

TPM: For one machine.

Forrest Norrod: Yes, I’m talking about one machine.

TPM: It boggles the mind a little bit, you know?

Forrest Norrod: I understand that. The scale of what’s being contemplated is mind blowing. Now, will all of that come to pass? I don’t know. But there are public reports of very sober people are contemplating spending tens of billions of dollars or even a hundred billion dollars on training clusters.

Forrest Norrod - AMD EVP (via The Next Platform)

Let's put that in context. If you still think 1.2 million GPUs isn't a huge amount, consider that Frontier, currently the world's fastest supercomputer, uses around 38,000 GPUs. A machine with 1.2 million GPUs would have more than 30 times as many, a shocking gap in GPU count alone. And if you consider the interconnect needed to tie such a large GPU cluster together, it is simply mind-boggling and would be extremely hard to achieve with modern-day technology.

Do we believe having 1.2 million GPUs in an AI cluster is impossible? Well, no. With the way AI is progressing, the need for compute power has grown rapidly, and as Forrest himself says, "sober people" are ready to spend billions on building large-scale data centers to meet market demand.

If you equip a supercomputer with 1.2 million of AMD's Instinct MI300X AI accelerators, the GPUs alone would cost roughly $18 billion, assuming a single unit costs around $15,000. And that doesn't even factor in the power requirements of such a super-cluster. If AI keeps accelerating at its current pace, we can expect such supercomputers to emerge across the globe. Each will be a huge investment and will take years to complete, but when finished, these will be some of the fastest computing platforms on the planet.
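For readers who want to check the math, here is a rough back-of-the-envelope sketch of the figures above. The GPU count, the $15,000 unit price and the roughly 38,000 GPU Frontier baseline come from this article. The 750 W per-GPU power figure is our own assumption for illustration, and it ignores CPUs, networking and cooling, so treat the power number as a loose lower bound rather than a real estimate.

```python
# Back-of-the-envelope numbers for a hypothetical 1.2 million GPU cluster.
GPU_COUNT = 1_200_000          # figure discussed in the interview
UNIT_PRICE_USD = 15_000        # per-GPU price assumed in the article
FRONTIER_GPUS = 38_000         # approximate Frontier GPU count cited above
ASSUMED_GPU_POWER_W = 750      # ASSUMPTION: per-GPU board power, illustration only


def gpu_cost_usd(count: int, unit_price: int) -> int:
    """Total GPU hardware cost in US dollars."""
    return count * unit_price


def scale_ratio(count: int, baseline: int) -> float:
    """How many times larger the cluster is than a baseline machine."""
    return count / baseline


def gpu_power_mw(count: int, watts_per_gpu: int) -> float:
    """GPU-only power draw in megawatts (excludes CPUs, network, cooling)."""
    return count * watts_per_gpu / 1_000_000


cost = gpu_cost_usd(GPU_COUNT, UNIT_PRICE_USD)
ratio = scale_ratio(GPU_COUNT, FRONTIER_GPUS)
power = gpu_power_mw(GPU_COUNT, ASSUMED_GPU_POWER_W)

print(f"GPU cost: ${cost / 1e9:.1f} billion")
print(f"Scale vs. Frontier: {ratio:.1f}x")
print(f"GPU-only power (assumed {ASSUMED_GPU_POWER_W} W each): {power:.0f} MW")
```

Changing `UNIT_PRICE_USD` or `ASSUMED_GPU_POWER_W` shows how sensitive the totals are to those guesses.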

NVIDIA's CEO Jensen Huang has said that the data center segment is expected to grow into a trillion-dollar market in the coming years, and Microsoft and OpenAI are rumored to be planning a $100 billion supercomputer called Stargate, so a figure of 1.2 million GPUs isn't entirely out of the question. Will big tech firms choose AMD over NVIDIA? That's a question only time will answer.

News Source: The Next Platform
