AMD Instinct MI200 CDNA 2 MCM GPU Is A Beast: 1.7 GHz Clocks, 47.9 TFLOPs FP64 & Over 4X Increase In FP64/BF16 Performance Over MI100

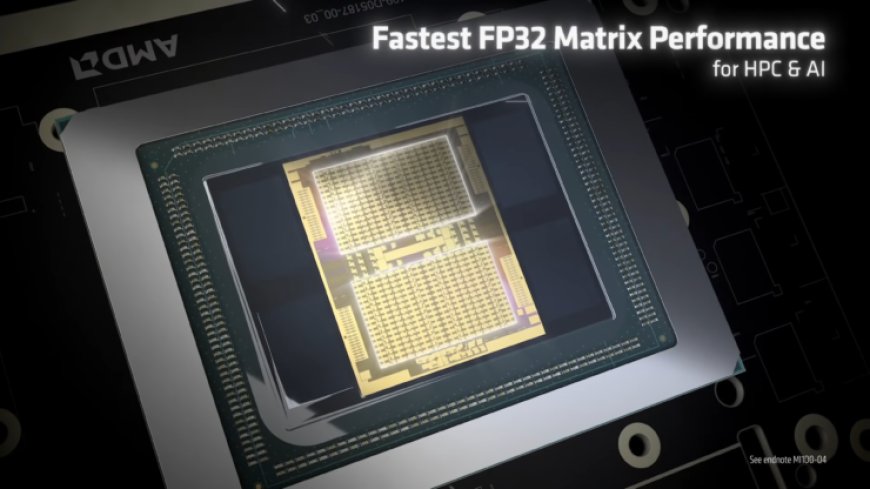

AMD's flagship Instinct MI200 is on the verge of launch, and it will be the first HPC GPU to feature an MCM design, based on the CDNA 2 architecture. The GPU looks set to offer some insane performance numbers compared to the existing Instinct MI100, with a more than 4x increase in FP64 and BF16 compute.

Update: ExecutableFix has posted more information, and it looks like the Instinct MI200 lineup will include two variants: a standard MI250 and an MI250X. According to the details, the MI250X will get 110 CUs per die (220 CUs in total), 128 GB of HBM2e memory and a 500W TDP, and will be built on a 7nm process.

Enough teasing. MI200 has two variants: MI250 and MI250X

MI250X: 110 CUs, 1.7GHz boost, 128GB HBM2e, 500W TDP, 7nm

— ExecutableFix (@ExecuFix) October 23, 2021

We have learned the specifications of the Instinct MI200 accelerator over time, but its overall performance figures remained a mystery until now. Twitter insider and leaker ExecutableFix has shared the first performance metrics for AMD's CDNA 2 based MCM GPU accelerator, and it's a beast.

1.7GHz boost clock, like you said: very high

— ExecutableFix (@ExecuFix) October 23, 2021

According to tweets by ExecutableFix, the AMD Instinct MI200 will run at a boost clock of up to 1.7 GHz, a 13% increase over the Instinct MI100. The CDNA 2 powered MCM GPU also packs almost twice as many stream processors as the MI100: 14,080 cores across 220 Compute Units. The GPU was expected to feature 240 Compute Units with 15,360 cores, but that configuration appears to have been replaced by a cut-down variant due to yields. A full SKU may still launch in the future, offering even higher performance.
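The clock and core figures above can be sanity-checked with a little arithmetic. The sketch below assumes 64 stream processors per CU, which is the ratio implied by 14,080 cores across 220 CUs. It also uses the MI100's published 1,502 MHz peak clock and 120 CUs. Neither of those MI100 figures is part of the leak.

```python
# Sanity check of the leaked MI200 clock and core counts.
# Assumptions (not from the leak): 64 stream processors per CU, and the
# MI100's published 1,502 MHz peak clock with 120 CUs.
SP_PER_CU = 64
MI100_BOOST_MHZ = 1502
MI200_BOOST_MHZ = 1700


def stream_processors(cus: int) -> int:
    """Total stream processors for a given number of compute units."""
    return cus * SP_PER_CU


mi100_sps = stream_processors(120)    # 7,680 on the MI100
leaked_sps = stream_processors(220)   # leaked MI250X configuration
rumored_sps = stream_processors(240)  # earlier rumored configuration
clock_gain_pct = (MI200_BOOST_MHZ / MI100_BOOST_MHZ - 1) * 100

print(f"Clock gain over MI100: {clock_gain_pct:.1f}%")
print(f"220 CUs: {leaked_sps:,} SPs ({leaked_sps / mi100_sps:.2f}x MI100)")
print(f"240 CUs: {rumored_sps:,} SPs")
```

This works out to a clock gain of about 13.2% and roughly 1.83x the MI100's stream processor count. Both results are consistent with the "13%" and "almost twice" claims.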

383 FP16/BF16

— ExecutableFix (@ExecuFix) October 23, 2021

In terms of performance, the AMD Instinct MI200 HPC Accelerator is going to offer almost 50 TFLOPs (47.9 TFLOPs) of FP64 and FP32 compute horsepower. That is a 4.16x increase in FP64 over the Instinct MI100. In fact, the MI200's FP64 figure exceeds the FP32 performance of its predecessor. Moving over to FP16 and BF16, we are looking at an insane 383 TFLOPs of performance. For perspective, the MI100 offers only 92.3 TFLOPs of peak BF16 performance and 184.6 TFLOPs of peak FP16 performance.
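The 47.9 and 383 TFLOPs figures fit a simple peak-throughput formula: CUs x FLOPs per CU per clock x clock. The sketch makes three assumptions:

- FP64 runs at full rate on 64 lanes per CU, with one fused multiply-add counted as 2 FLOPs.
- Each CU delivers 1,024 FP16/BF16 FLOPs per clock. This figure is back-solved from the leaked 383 TFLOPs, so it is hypothetical and not an AMD specification.
- The MI100 baselines are AMD's published peak numbers. The MI100's 11.5 TFLOPs of FP64 is the figure implied by the 4.16x claim.

```python
# Rough reconstruction of the leaked MI200 peak-throughput figures.
# Assumptions: 220 enabled CUs at 1.7 GHz; full-rate FP64 on 64 lanes per
# CU (one FMA = 2 FLOPs); 1,024 FP16/BF16 FLOPs per CU per clock is
# back-solved from the leaked 383 TFLOPs (hypothetical, not official).
CUS = 220
CLOCK_HZ = 1.7e9


def peak_tflops(flops_per_cu_per_clock: int) -> float:
    """Peak TFLOPs = CUs x FLOPs per CU per clock x clock rate."""
    return CUS * flops_per_cu_per_clock * CLOCK_HZ / 1e12


fp64 = peak_tflops(64 * 2)  # about 47.9 TFLOPs
bf16 = peak_tflops(1024)    # about 383 TFLOPs (same rate assumed for FP16)

# MI100 peak figures in TFLOPs, as published by AMD.
MI100 = {"fp64": 11.5, "bf16": 92.3, "fp16": 184.6}

print(f"FP64: {fp64:.1f} TFLOPs, {fp64 / MI100['fp64']:.2f}x MI100")
print(f"BF16: {bf16:.1f} TFLOPs, {bf16 / MI100['bf16']:.2f}x MI100")
print(f"FP16: {bf16:.1f} TFLOPs, {bf16 / MI100['fp16']:.2f}x MI100")
```

Under these assumptions, FP64 and BF16 each improve by roughly 4.15x to 4.16x. FP16, which already ran at twice the BF16 rate on the MI100, improves by only about 2.07x.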

According to HPCWire, the AMD Instinct MI200 will power three top-tier supercomputers: the United States’ exascale Frontier system, the European Union’s pre-exascale LUMI system, and Australia’s petascale Setonix system. The competition includes the A100 80 GB, which offers 19.5 TFLOPs of FP64 (Tensor Core), 156 TFLOPs of TF32 and 312 TFLOPs of FP16 compute power. We are also likely to hear about NVIDIA's own Hopper MCM GPU next year, so 2022 should bring heated competition between the two GPU juggernauts.
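Here is a quick paper comparison against the A100 80 GB numbers quoted in this article. The A100's 19.5 TFLOPs of FP64 and 312 TFLOPs of FP16 are Tensor Core (matrix) peaks. Neither set of figures is a measured benchmark.

```python
# Paper comparison of leaked MI200 peaks vs. A100 80 GB peaks (TFLOPs).
# These are theoretical peak numbers, not benchmark results.
mi200 = {"FP64": 47.9, "FP16": 383.0}
a100 = {"FP64": 19.5, "FP16": 312.0}

# Ratio of MI200 peak to A100 peak for each precision.
ratios = {precision: mi200[precision] / a100[precision] for precision in mi200}

for precision, ratio in ratios.items():
    print(f"{precision}: {mi200[precision]} vs {a100[precision]} TFLOPs -> {ratio:.2f}x")
```

On paper, the MI200's advantage is largest in FP64 (about 2.46x) and much narrower in FP16 (about 1.23x).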

Inside the AMD Instinct MI200 is an Aldebaran GPU featuring two dies, a primary and a secondary. Each die consists of 8 shader engines, for a total of 16 SEs. Each Shader Engine packs 16 CUs with full-rate FP64, packed FP32 and a 2nd Generation Matrix Engine for FP16 and BF16 operations. Each die is therefore composed of 128 compute units, or 8192 stream processors, giving 256 compute units (16,384 stream processors) across the full chip. With 110 CUs enabled per die, the leaked configuration comes to 220 compute units, or 14,080 stream processors. The Aldebaran GPU also features a new XGMI interconnect. Each chiplet features a VCN 2.6 engine and the main IO controller.
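The die and shader-engine breakdown can be tallied as follows. The 64 stream processors per CU is an assumption consistent with the figures in this article. The figure of 110 enabled CUs per die comes from the ExecutableFix leak.

```python
# Tally of the Aldebaran compute hierarchy: dies -> shader engines -> CUs.
DIES = 2
SHADER_ENGINES_PER_DIE = 8
CUS_PER_SE = 16
SPS_PER_CU = 64            # assumption: 64 stream processors per CU
ENABLED_CUS_PER_DIE = 110  # per the ExecutableFix leak

cus_per_die = SHADER_ENGINES_PER_DIE * CUS_PER_SE  # 128
physical_cus = DIES * cus_per_die                  # 256
enabled_cus = DIES * ENABLED_CUS_PER_DIE           # 220

print(f"Per die:  {cus_per_die} CUs / {cus_per_die * SPS_PER_CU:,} SPs")
print(f"Physical: {physical_cus} CUs / {physical_cus * SPS_PER_CU:,} SPs")
print(f"Enabled:  {enabled_cus} CUs / {enabled_cus * SPS_PER_CU:,} SPs")
print(f"Disabled: {physical_cus - enabled_cus} CUs")
```

If this breakdown is accurate, 36 of the 256 physical CUs are fused off in the leaked configuration.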

As for DRAM, AMD has gone with an 8-channel interface made up of 1024-bit interfaces, for an 8192-bit wide bus. Each interface can support HBM2e stacks built from 2 GB DRAM dies. This should allow up to 16 GB of HBM2e memory per stack, and since there are eight stacks in total, the total capacity comes to a whopping 128 GB. That's 48 GB more than the A100, which houses 80 GB of HBM2e memory. The full visualization of the Aldebaran GPU on the Instinct MI200 is available here.
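The memory figures follow directly from the stack count. The sketch assumes eight 2 GB DRAM dies per stack (an 8-high stack), which is what the 16 GB-per-stack figure implies.

```python
# Memory bus width and capacity from the stack configuration.
STACKS = 8
BITS_PER_STACK = 1024
DRAM_DIE_GB = 2
DIES_PER_STACK = 8  # assumption: 8-high stacks, implied by 16 GB per stack
A100_GB = 80

bus_width_bits = STACKS * BITS_PER_STACK   # 8192-bit bus
gb_per_stack = DRAM_DIE_GB * DIES_PER_STACK  # 16 GB per stack
total_gb = STACKS * gb_per_stack           # 128 GB total

print(f"Bus width: {bus_width_bits}-bit")
print(f"Capacity: {gb_per_stack} GB/stack x {STACKS} = {total_gb} GB")
print(f"vs A100 80 GB: +{total_gb - A100_GB} GB")
```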
